Introduction

I’ve always thought it would be neat to create a digital version of oneself. The closest that programmers can get is most likely the chat bot. Aside from serving as a step toward passing the Turing Test, chat bots may have an ulterior motive as well: to create a digital copy of one’s own personality and, thus, a pseudo form of immortality. We’ve made a lot of progress (on the chat bot part, not the immortality part), from the humble origins of Eliza all the way to the highly configurable Alice chat bot.

Chat bots typically use case-based reasoning or similar techniques to map responses to certain keywords in a sentence. In this manner, a crude personality can be programmed and a unique chat bot created. This is an interesting exercise that I think every software developer should try at least once. But let’s try something a little different and apply a modern spin to the idea of an artificially generated digital persona.

Is it possible to apply machine learning to a Twitter user’s collection of tweets and accurately model that user’s personality?

This article describes a program that uses unsupervised learning, with the K-Means algorithm, to automatically group a collection of tweets into distinct categories. Once the categories have been organized, the program locates new links that match the categories and recommends them for the persona. In the process, we’ll create a machine learning recommendation engine for Twitter that recommends links related to the user’s interests and automatically identifies new content.

Supervised vs Unsupervised Learning

There are two core methods for building a model from data with machine learning: supervised and unsupervised learning. Since we’re trying to determine a set of topics for a Twitter user’s personality, we could choose either method to build a recommendation engine. Let’s take a look at what’s involved for each learning type.

Supervised Learning

With supervised learning, using an algorithm such as an SVM (support vector machine), a model could be constructed that separates articles a user likes from those the user dislikes. This is similar to book or movie rating services. We would record a set number of links that the user clicks on and an equal number of links that the user skips over (assume that we’re using an RSS feed of articles in this example). The SVM would learn to classify new articles as liked or disliked, thus capturing a general personality. One drawback to this approach is the sheer amount of rated data that is required, in addition to a logging mechanism to record the likes and dislikes. Since this kind of data wasn’t available at the time of this project, we’ll take the next best thing: unsupervised learning.

Unsupervised Learning

By using unsupervised learning, a program can automatically sort data (tweets) into distinct categories. It does this by identifying features that the texts have in common (such as shared terms) and then partitioning the texts into clusters. Once the data is grouped, we can classify new articles as belonging to specific clusters, and thus to the topics that their neighboring articles correspond to. If we find content that matches one of the core topics, we can identify it and recommend it for use in the persona.

It’s important to note that while unsupervised learning can group data into clusters, it has no knowledge of what those clusters actually mean. For example, the algorithm may group a series of articles that deal with search engine optimization into a single cluster, even though it has no knowledge of SEO. As we’ll describe later on, the clusters can be labelled, and thus provide hash tags for recommended tweets.

Project Setup

A data-set was built up by extracting a history of tweets.

The tweets are digitized and then processed with the K-means unsupervised learning algorithm, which groups them together into clusters. Each cluster ideally represents a unique category of tweets. Given a typical persona, the type of tweets made and links normally posted should match a subset of common categories. After all, people usually have a specific set of interests that they talk about. If machine learning can categorize those interests into clusters, we should be able to accurately find similar articles related to those topics.

The end result will be the construction of tweets that appear as natural, and on-topic, as human-authored ones. The tweets should match the previous history of tweets, including text and hash tags.

K-Means Unsupervised Learning

The K-Means algorithm was selected because it is a standard unsupervised machine learning algorithm for grouping large amounts of data. In this case, the data will be tweets.

K-Means works by starting with a specific value of k, representing how many clusters you want to identify. The algorithm begins with a random (or more strategic) initialization of the k cluster centers, called centroids. It then assigns each data point to the cluster with the nearest centroid and moves each centroid to the mean of the points assigned to it. These two steps repeat until the centroids stop moving or no data point switches clusters.
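To make the assign-and-update loop concrete, here is a minimal from-scratch sketch of K-means (Lloyd's algorithm) in plain JavaScript. It is not the clusterfck implementation used later in this article; the function names `kmeans` and `nearest`, the random initialization from existing data points, and the `maxIterations` cap are assumptions for illustration.

```javascript
// Euclidean distance between two equal-length vectors.
function euclidean(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

// Index of the centroid closest to `point` (the first one wins ties).
function nearest(point, centroids) {
  let best = 0;
  let bestDist = Infinity;
  centroids.forEach((c, i) => {
    const d = euclidean(point, c);
    if (d < bestDist) {
      bestDist = d;
      best = i;
    }
  });
  return best;
}

// Minimal K-means sketch; assumes 1 <= k <= points.length.
function kmeans(points, k, maxIterations = 100) {
  // Initialize: shuffle the point indices and use the first k points as centroids.
  const order = points.map((_, i) => i);
  for (let i = order.length - 1; i > 0; i--) {
    const j = Math.floor(Math.random() * (i + 1));
    [order[i], order[j]] = [order[j], order[i]];
  }
  let centroids = order.slice(0, k).map((i) => points[i].slice());
  let assignments = points.map(() => -1);

  for (let iter = 0; iter < maxIterations; iter++) {
    // Assignment step: each point joins the cluster with the nearest centroid.
    const next = points.map((p) => nearest(p, centroids));
    const changed = next.some((c, i) => c !== assignments[i]);
    assignments = next;
    if (!changed) break; // No point switched clusters, so the run has converged.

    // Update step: move each centroid to the mean of its assigned points.
    centroids = centroids.map((old, c) => {
      const members = points.filter((_, i) => assignments[i] === c);
      if (members.length === 0) return old; // Leave an empty cluster's centroid in place.
      return old.map((_, d) => members.reduce((sum, m) => sum + m[d], 0) / members.length);
    });
  }
  return { centroids, assignments };
}
```

Because the initialization is random, two runs can produce different clusterings, which is why the article later recommends running the algorithm several times.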

Choosing a Value for K

The value of k is an important parameter in the K-means algorithm. For this project, various values were tried, with an optimal value found around k = 10. If k is too small, the result will suffer from under-fitting: many unrelated articles will be grouped together into just a few clusters, poorly representing the topics of interest. If k is too large, the result will be over-fitting: the articles will be split into too many clusters, resulting in poor accuracy when categorizing new data. In the extreme case, too large a k-value can result in a separate cluster for every single article, eliminating the value of clustering altogether.

If you’re completely unsure which k-value to try, a common rule of thumb is k ≈ √(n/2), where n is the number of data points:

```javascript
function guessK(points) {
  return Math.sqrt(points / 2); // points: the number of tweets (data points)
}
```

Applied to a history of 173 tweets, the formula gives √(173/2) = √86.5 ≈ 9.3, which suggests k = 9. Rounding 9.3 up gives 10, matching our selected value of k = 10.

Digitizing Tweets

After collecting a data-set of tweets, we’ll need to digitize the text into a machine-readable format. Several techniques exist for this, such as TF-IDF; however, we’ll use a simple dictionary approach.

A vocabulary is built from the tweet data-set by tokenizing the text and applying the Porter stemmer algorithm to narrow down the distinct list of words. We then digitize each tweet into an array of 1’s and 0’s, according to whether the tweet contains each vocabulary term. The result is a two-dimensional array of bits, with one row per tweet and each row having the same length as the vocabulary.

For example, if we’ve identified the following unique terms for our vocabulary [“bird”, “ocean”, “sky”] then each row in our data-set will be of length 3. The text “A bird is flying in the sky” will digitize into [1, 0, 1]. Similarly, the text “I like swimming in the ocean on sunny days” will digitize into [0, 1, 0].
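A minimal sketch of this dictionary approach, assuming a simple regular-expression tokenizer. The helper names `tokenize`, `buildVocabulary`, and `digitize` are hypothetical, and the Porter stemming step described above is omitted to keep the example self-contained, so words such as "flying" and "fly" would be treated as different terms here.

```javascript
// Split text into lowercase tokens; "#" is kept so hashtags and "c#" survive.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9#]+/g) || [];
}

// Build a sorted list of distinct terms across all tweets.
// A real pipeline would also stem each token (e.g. with a Porter stemmer).
function buildVocabulary(texts) {
  const terms = new Set();
  texts.forEach((t) => tokenize(t).forEach((w) => terms.add(w)));
  return Array.from(terms).sort();
}

// Encode a text as a bit vector: 1 if the vocabulary term occurs in the text, else 0.
function digitize(text, vocabulary) {
  const words = new Set(tokenize(text));
  return vocabulary.map((term) => (words.has(term) ? 1 : 0));
}

const vocabulary = ["bird", "ocean", "sky"];
console.log(digitize("A bird is flying in the sky", vocabulary)); // [ 1, 0, 1 ]
console.log(digitize("I like swimming in the ocean on sunny days", vocabulary)); // [ 0, 1, 0 ]
```

Running `digitize` over every tweet produces the two-dimensional array of bits that is fed to K-means.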

Coding it Up in JavaScript

We’ll use a JavaScript implementation of K-means to begin writing the machine learning recommendation engine in Node.js. The code for training with K-means appears as follows:

```javascript
var clusterfck = require("clusterfck");
```

In the above code, we begin by digitizing the tweets and Porter-stemming the vocabulary. We then input the data to the K-means algorithm (clusterfck, available on npm) and obtain a collection of clusters as a result. The kmeans object also holds the centroids: one per cluster, k in total, each marking the center of its cluster. With the algorithm trained, we can now classify new articles that the algorithm has never seen and have it calculate the assigned cluster.
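Classifying an unseen article comes down to the same nearest-centroid rule that K-means uses during training. The sketch below assumes the article has already been digitized against the training vocabulary; `classify` is a hypothetical helper (not a clusterfck call), and the centroid values are made up for illustration.

```javascript
// Return the index of the centroid nearest to `vector`. Squared Euclidean distance
// ranks centroids the same way as Euclidean distance, so the square root is skipped.
function classify(vector, centroids) {
  let best = -1;
  let bestDistance = Infinity;
  centroids.forEach((centroid, index) => {
    const distance = centroid.reduce((sum, value, i) => sum + (value - vector[i]) ** 2, 0);
    if (distance < bestDistance) {
      bestDistance = distance;
      best = index;
    }
  });
  return best;
}

// Hypothetical centroids over the vocabulary ["c#", "javascript", "learning", "machine"].
const centroids = [
  [0.1, 0.0, 0.9, 0.8], // a "machine learning" cluster
  [0.0, 0.9, 0.1, 0.0], // a "javascript" cluster
];

console.log(classify([0, 1, 0, 0], centroids)); // 1
```

Each centroid is the mean of the bit vectors in its cluster, so its values can be read as the fraction of that cluster's tweets containing each term.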

Visualizing the Clusters

To get an idea of how the algorithm is clustering, we can visualize the tweets by mapping each tweet’s text to its digitized input values and looking at its assigned cluster. We can then display the tweets by group. A word cloud of the terms in each group makes the clusters easy to visualize.

Cluster 2: machine, learning, c#, net

The above cluster has grouped together common tweets involving machine learning. In the word cloud, the most frequent terms appear in the largest font. A suitable hashtag for this cluster might be #machinelearning.

Cluster 7: net, asp, mvc, c#

The above cluster centers on the topic of ASP.NET and MVC. A suitable hashtag for this cluster might be #aspnet.

Within the calculated clusters, you can see how common topics tend to group together. This allows us to classify new articles and links under a specific topic. We can also take a reasonable guess at a suitable hashtag and interest, allowing us to hand-label each cluster.

Adding Hash Tags to the Tweets

With a trained unsupervised algorithm, we can now assign new links and tweets to specific clusters. However, we still don’t know what the clusters mean. We can name each cluster by examining the tweets within it.

We can start by counting the most popular vocabulary terms in each cluster and listing those keywords in rank order. This can serve as a rudimentary labelling system for the clusters.
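One way to produce such rankings, sketched under the assumption that the tweets are already digitized into bit vectors and that each has an assigned cluster index: for each cluster, count how many tweets contain each term and keep the top few. The function name `topTermsByCluster` and the default of four terms are hypothetical choices.

```javascript
// For each cluster, rank vocabulary terms by how many of its tweets contain them
// and return the top `count` terms as a rough label.
function topTermsByCluster(vectors, assignments, vocabulary, count = 4) {
  const totals = new Map(); // cluster index -> per-term counts
  vectors.forEach((vector, i) => {
    const cluster = assignments[i];
    if (!totals.has(cluster)) totals.set(cluster, new Array(vocabulary.length).fill(0));
    const sums = totals.get(cluster);
    vector.forEach((bit, t) => {
      sums[t] += bit;
    });
  });

  const labels = {};
  for (const [cluster, sums] of totals) {
    labels[cluster] = vocabulary
      .map((term, t) => ({ term, n: sums[t] }))
      .filter((entry) => entry.n > 0)
      .sort((a, b) => b.n - a.n) // Stable sort: ties keep vocabulary order.
      .slice(0, count)
      .map((entry) => entry.term);
  }
  return labels;
}
```

For example, given vocabulary `["asp", "c#", "learning", "machine", "mvc", "net"]`, two machine learning tweets in cluster 0 and two ASP.NET MVC tweets in cluster 1 yield labels such as `["learning", "machine", "c#"]` and `["asp", "mvc", "net", "c#"]`.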

For example, a run of the algorithm produced the following clusters for my tweet history:

Cluster 0: net, c#, #dotnet, framework

Taking this a step further, we can store the results in a MongoDB collection and allow the cluster keywords to be customized. This lets us specify our own keywords to override the automatically selected ones. The following JSON from the MongoDB collection shows an example of customized centroid groups from a run of the K-means algorithm.

```json
{
```
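Here is a hypothetical shape for such a customized record, together with the override logic: custom keywords, when present, take priority over the automatically ranked ones, and the first keyword becomes the hashtag. The field names `auto` and `custom`, the custom `dotnet` keyword, and the helpers `clusterKeywords` and `hashtagFor` are assumptions, not the article's actual schema.

```javascript
// Hypothetical saved record: automatic keywords per cluster plus optional overrides.
const brain = {
  clusters: [
    { index: 0, auto: ["net", "c#", "#dotnet", "framework"], custom: ["dotnet"] },
    { index: 8, auto: ["javascript", "source", "code"], custom: null },
  ],
};

// Prefer the custom keywords when present; otherwise fall back to the automatic ones.
function clusterKeywords(brain, index) {
  const cluster = brain.clusters.find((c) => c.index === index);
  if (!cluster) return [];
  return cluster.custom && cluster.custom.length > 0 ? cluster.custom : cluster.auto;
}

// Turn the first keyword into a hashtag, e.g. "machine learning" -> "#machinelearning".
function hashtagFor(brain, index) {
  const [first] = clusterKeywords(brain, index);
  if (!first) return "";
  return "#" + first.replace(/^#/, "").replace(/\s+/g, "").toLowerCase();
}

console.log(hashtagFor(brain, 8)); // #javascript
```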

Dropping the Biggest Cluster

You’ll probably notice that one of the clusters contains a lot more tweets than the others. This cluster is usually a broad mixture of tweets that didn’t fit into a particular sub-category or that didn’t contain enough related tweets to group. I like to consider this cluster the “junk” cluster. We can discard new articles that are assigned to the junk cluster during classification of new tweets. This will help us collect a set of more targeted tweets for our persona.
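Identifying and skipping the junk cluster takes only a few lines. This sketch assumes the training assignments are available as an array of cluster indices and that each newly classified article carries a `cluster` field; the names `largestCluster` and `dropJunk` are hypothetical.

```javascript
// Return the index of the cluster with the most members (the "junk" cluster).
function largestCluster(assignments) {
  const sizes = new Map();
  for (const c of assignments) sizes.set(c, (sizes.get(c) || 0) + 1);
  let junk = -1;
  let max = -1;
  for (const [c, n] of sizes) {
    if (n > max) {
      max = n;
      junk = c;
    }
  }
  return junk;
}

// Keep only the new articles that were not assigned to the junk cluster.
function dropJunk(articles, junkCluster) {
  return articles.filter((article) => article.cluster !== junkCluster);
}
```

The junk cluster is determined once, from the training run, and then reused when filtering each batch of new articles.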

Saving the Brain, Rinse, and Repeat

Due to the random nature of K-means, you’re bound to get different clusters each time the algorithm is run. Because of this, it’s best to run it several times until a desired grouping is achieved. Once complete, the centroid values can be saved to the database in JSON format, along with the assigned cluster keywords.

When we’re ready to classify a new set of articles, we load the values from the database and restore the centroid values.

```javascript
var kmeans = new clusterfck.kmeans();
```
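A minimal sketch of the save and restore round trip, using plain `JSON.stringify` and `JSON.parse`. The record layout (`centroids`, `keywords`, `savedAt`) and the helper names are assumptions; in practice the serialized record would be written to and read from the MongoDB collection rather than kept in a local variable.

```javascript
// Serialize the trained centroids together with one keyword list per centroid.
function saveBrain(centroids, keywords) {
  return JSON.stringify({ centroids, keywords, savedAt: new Date().toISOString() });
}

// Restore the centroids and keywords, checking that they still line up.
function loadBrain(json) {
  const { centroids, keywords } = JSON.parse(json);
  if (!Array.isArray(centroids) || !Array.isArray(keywords) || centroids.length !== keywords.length) {
    throw new Error("Saved brain must have one keyword list per centroid");
  }
  return { centroids, keywords };
}
```

Keeping the keywords in the same record as the centroids ensures that cluster index 8 always maps to the same label after a restore.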

Loading Fresh Content Through RSS Feeds

Our machine learning solution is almost complete. We now need a source of data to begin classifying new articles into our keyword-labeled clusters. We can parse some basic RSS feeds to find suitable content. As mentioned above, we’ll simply ignore any articles that are classified into the junk cluster. Articles in all other clusters can be accepted and recommended for posting.

URL Shortening with Google

When we find a new article that classifies into an acceptable cluster, we’ll first need to shorten the URL. We can do this with the goo.gl URL shortening service via the googleapis Node.js library. This library supports OAuth 2.0, making it convenient to shorten URLs, post, and keep track of them from your Google account.

Twitter API

We’re almost finished. We can automatically post the text and shortened link to Twitter with the node-twitter-api library. Since we want to avoid posting duplicates, we’ll save each tweet as a document in MongoDB and check against this database before classifying new links.
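The duplicate check can be sketched with an in-memory `Set` standing in for the MongoDB collection, an assumption made to keep the example self-contained. The URL normalization (dropping the fragment and a trailing slash) is a hypothetical choice, as are the names `PostedHistory` and `newItems`.

```javascript
// In-memory stand-in for the MongoDB history of posted links.
class PostedHistory {
  constructor(urls = []) {
    this.urls = new Set(urls.map((url) => PostedHistory.normalize(url)));
  }

  // Light normalization so trivially different URLs compare equal.
  static normalize(url) {
    const u = new URL(url);
    u.hash = "";
    return u.toString().replace(/\/$/, "");
  }

  has(url) {
    return this.urls.has(PostedHistory.normalize(url));
  }

  add(url) {
    this.urls.add(PostedHistory.normalize(url));
  }
}

// Keep only the feed items whose links have not been posted yet.
function newItems(items, history) {
  return items.filter((item) => !history.has(item.link));
}
```

Filtering before classification avoids spending any work on links that would be rejected as duplicates anyway.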

Results?

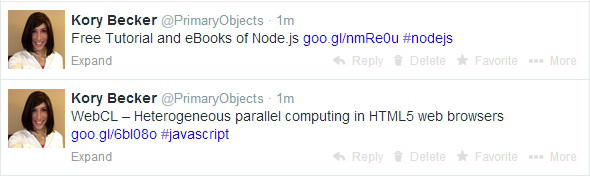

In the example above, the recommendation engine identified two articles for the persona, including automatic selection of hashtags.

Behind the scenes, each recommended article was classified under a specific topic (clusters 8 and 9), which provided the hashtag category. Note that the hashtags appended to the tweets do not appear in the original article titles; they were derived automatically from the cluster labels.

Keeping Track of What our Machine-Mind is Thinking

Upon automatically identifying new content of interest, each record is stored in MongoDB to serve as a history and a means of preventing duplicates. Notice that in the example record below, our program identified the “Text” as belonging to Cluster 8 (which includes the top terms: javascript, source, code) and automatically appended the hashtag “#javascript”, even though this term doesn’t appear in the original text.

```json
{
```
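Composing the tweet text itself can be sketched as joining the article title, the shortened link, and the cluster’s hashtag, trimming the title when the result would be too long. The `composeTweet` name and the 280-character default are assumptions (Twitter’s limit was 140 characters before late 2017, so pass `maxLength` accordingly), and the sketch ignores the fact that Twitter counts links at a fixed length via t.co.

```javascript
// Join title, short link, and hashtag; trim the title with an ellipsis if needed.
function composeTweet(title, shortUrl, hashtag, maxLength = 280) {
  const suffix = ` ${shortUrl} ${hashtag}`;
  const room = maxLength - suffix.length;
  if (room <= 0) throw new Error("Link and hashtag alone exceed maxLength");
  const text = title.length <= room ? title : title.slice(0, room - 1).trimEnd() + "…";
  return text + suffix;
}

console.log(composeTweet("Hello", "https://example.com/x", "#javascript"));
// Hello https://example.com/x #javascript
```

Only the title is ever shortened, so the link and the cluster-derived hashtag always survive intact.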

Conclusion

The result was a fairly realistic categorization of a user’s tweet history. As described above, the ten identified clusters accurately reflected a typical set of links that would be tweeted. By sourcing new data from a list of select RSS feeds, the algorithm was able to pick new articles from that content and match them against the identified categories.

The automatic url shortening and selection of hashtags seemed to work well, adding an additional realistic effect. This is especially true when the hashtag selected is not even found within the literal text of the tweet itself.

Although specific technology-oriented RSS feeds were being used to source content, it would also be interesting to see how other types of content fit the clusters and if the selections remain on topic. An additional consideration might be how to identify new topics, and perhaps even reply to direct messages. Of course, we always have the chat bot for that.

About the Author

This article was written by Kory Becker, software developer and architect, skilled in a range of technologies, including web application development, machine learning, artificial intelligence, and data science.
