Introduction

Is computer software ready to be controlled by speech? For decades, we’ve been building software with graphical user interfaces, allowing users to select, point, and click their way through applications. Recently, momentum has been building around conversational user interfaces, where users communicate with software through an almost human-like exchange. Beyond the novelty of holding a conversation with your computer, the idea of a conversational UI is about letting software orchestrate complex or numerous tasks.

Of course, the conversational UI seems awfully similar to a chatbot, and the chatbot is nothing new. We’ve seen our share of Eliza psychologist chatbots, IRC eggdrop and XDCC bots, Turing test contestants, and many more. However, there are some differences between the classic chatbot and a conversational UI, with the key concept being productivity.

In this article, we’ll discuss what makes a conversational user interface important. We’ll also walk through a simple example of a conversational UI that accesses news stories for users in Slack.

Why Conversational UIs?

Where exactly did conversational UIs come from? They have a humble origin in the command-line interfaces of legacy terminal programs. They’ve also appeared in many games, sometimes even simulating an interaction with a futuristic computer system. The key idea is to allow users to have a natural exchange with the computer in order to get work done. This type of UI is growing in demand, mainly due to usability. Several key drivers are guiding this transition in interface design, the first of which is mobile.

Mobile

The most obvious change in software has been the emergence of mobile computing. With less screen real estate, touching and tapping in place of keyboards, and briefer interactions, it has become more important to let the user get work done with less interaction. This largely leads to fewer points and clicks and more interpretation.

On mobile devices, users are familiar with texting. Messaging, chat, and quick text instructions are common. This enables a natural transition towards controlling software in the same manner. Along the same lines, it’s not just mobile that is pushing users in this direction; it’s the medium itself.

Medium

Along with mobile devices, where users are familiar with messaging and chat, there are other growing mediums, such as messaging platforms like Slack and speech platforms like Apple Siri and Amazon Echo. These types of environments foster chat-like interaction, making it natural to communicate with software via natural language. Once you tie in speech-to-text, this becomes even more powerful.

Microservices

In addition to the platform fostering conversational UI, there has also been a change in computing. In the early days of IRC, chatbots were used extensively. They usually provided utility functions such as managing a channel, adding and removing user permissions, managing files, and occasionally telling a joke. As common as they were, the popularity of chatbots diminished as users began shifting to the early web.

However, today there has been an explosion of microservices. A web service can be found for many different kinds of tasks. This type of interconnectivity provides a perfect backdrop for a conversational UI to harness. Where you might typically have a web site's graphical interface calling background web services, you can now have chatbots making the same calls on behalf of the user.

Artificial Intelligence

Of course, another powerful improvement in chatbot usability has come from advances in artificial intelligence. Natural language processing, speech-to-text, text-to-speech, and image recognition have the potential to enhance chatbots even further. It’s possible to ask chatbots complex questions, such as the contents or categorization of an image, and receive an accurate reply. This brings a more immersive experience to the idea of communicating with software.

A Conversational UI for Accessing News

The following chatbot was developed for the 2016 AP Technology Innovation Summit, using the Node.js library chatskills. The chatskills library allows building a bot using Amazon Alexa-style skills and intents.

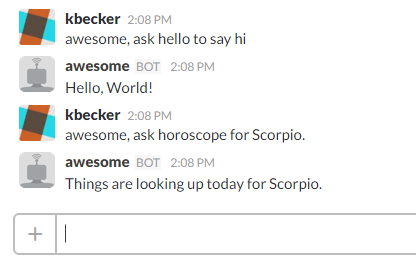

The above chat session is captured from Slack. In the example, the user is interacting with a conversational UI chatbot. Notice the first query issued by the user:

"ap, ask epix how many photos were sent by John Doe in the last 36 hours?"

We can identify several key parts of this query, which will be interpreted by the chatbot. The first is the keyword, “ap”. This is the username of the bot, and it acts as an activator to let the bot know to listen to this message.

The second phrase is “ask epix”. The command “ask” signals that a namespace is about to be named, which in this case is “epix”. This tells the bot to switch the conversation context to the “epix” service. Now the bot knows which service to access for the current and subsequent requests.

Finally, the remainder of the sentence “how many photos were sent by …” identifies the query itself. The bot will access the epix service to execute the query and return a response. In this case, the bot queries against a database to execute a search with the parameters found in the sentence. The bot replies with 18 (database records) as an answer.
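
The three parts of the query (bot name, namespace, and query text) can be separated with a small parser. The following is a minimal sketch in plain JavaScript, not the chatskills implementation; the function name `parseMessage` and the returned field names are assumptions made for illustration.

```javascript
// Hypothetical parser: splits "<bot>, ask <namespace> <query>" into its parts.
// Assumes the bot name contains no regular-expression special characters.
function parseMessage(text, botName = "ap") {
  const pattern = new RegExp(`^${botName},?\\s+ask\\s+(\\w+)\\s+(.+)$`, "i");
  const match = pattern.exec(text.trim());
  if (!match) {
    // Not addressed to the bot by name; treat the whole text as a follow-up query.
    return { addressed: false, namespace: null, query: text.trim() };
  }
  return { addressed: true, namespace: match[1].toLowerCase(), query: match[2] };
}

const parsedExample = parseMessage(
  "ap, ask epix how many photos were sent by John Doe in the last 36 hours?"
);
console.log(parsedExample.namespace); // epix
console.log(parsedExample.query);
```

A message such as "show the first photo" does not match the pattern, so it comes back with `addressed: false` and can be routed to whichever namespace is already active.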

A Note on Namespacing

In this first example sentence, we’ve used a namespace. Namespaces allow us to separate logic and tasks that might reuse similar conversational keywords. By namespacing services, we can allow other services to use the same keywords, while still identifying which service we’re currently working in.

A bot might have many different namespaces. In addition to the epix service, perhaps it may also have a photos service, a users service, and an orders service. Each service may be activated by requesting it from the bot, in the form, “ap, ask users to locate the last logged-in user”, etc.
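
To illustrate how namespaces keep identical keywords apart, here is a minimal sketch of a registry keyed by namespace. The names `skillRegistry`, `registerSkill`, and `dispatch`, as well as the "latest" keyword, are hypothetical and are not part of chatskills.

```javascript
// Hypothetical registry: namespace -> { keyword: handler }.
const skillRegistry = new Map();

function registerSkill(namespace, handlers) {
  skillRegistry.set(namespace, handlers);
}

function dispatch(namespace, keyword) {
  const handlers = skillRegistry.get(namespace);
  const known = handlers !== undefined &&
    Object.prototype.hasOwnProperty.call(handlers, keyword);
  if (!known) {
    return `Sorry, "${namespace}" has no handler for "${keyword}".`;
  }
  return handlers[keyword]();
}

// Two services share the keyword "latest" without colliding.
registerSkill("photos", { latest: () => "Here is the latest photo." });
registerSkill("orders", { latest: () => "Here is the latest order." });

console.log(dispatch("photos", "latest")); // Here is the latest photo.
console.log(dispatch("orders", "latest")); // Here is the latest order.
```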

Remembering Conversational Context

"show the first photo"

The second query by the user is, “show the first photo”. Notice that the user did not mention the bot’s name, nor did they ask for a service namespace. The user simply made a new query against the current subject. In this case, the subject is the search results returned from the prior query. The bot holds these search results within a session for the current namespace context.

Note that different users can have different sessions and contexts active at the same time. It’s up to the bot to handle these sessions accordingly.

By remembering the topic of a conversation, the chatbot can provide quicker and easier access to complete tasks. Without having to re-mention the original topic in subsequent queries, the user can get the bot to execute related services on the current subject.
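
One way to keep a separate context per user is a session store keyed by user ID. This is a sketch under assumptions: the store `userSessions`, the helper names, and the session fields are illustrative, and a real bot would likely also expire idle sessions.

```javascript
// Hypothetical in-memory store: user ID -> that user's conversation context.
const userSessions = new Map();

function getUserSession(userId) {
  if (!userSessions.has(userId)) {
    userSessions.set(userId, { namespace: null, results: [] });
  }
  return userSessions.get(userId);
}

// Called after a query completes, so follow-up questions can refer to it.
function rememberResults(userId, namespace, results) {
  const session = getUserSession(userId);
  session.namespace = namespace;
  session.results = results;
}

function currentSubject(userId) {
  const { namespace, results } = getUserSession(userId);
  return namespace === null
    ? "no active topic"
    : `${results.length} result(s) from ${namespace}`;
}

rememberResults("alice", "epix", ["photo-1", "photo-2"]);
console.log(currentSubject("alice")); // 2 result(s) from epix
console.log(currentSubject("bob"));   // no active topic
```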

Tracking the Conversation Session

Conversational context is managed by using a state machine, in the form of session variables. Within each service request, we store the result of a query in a session variable. Subsequent requests can check the session contents to identify the context of a search result or other data. Additional session variables may be used, such as an integer that corresponds to a particular step in a sequence of requests. In this manner, a chatbot could guide a user through a series of questions, incrementing the state variable each time, and collecting the parameters needed to form the back-end query.
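
The guided sequence of questions described above can be sketched with an integer state variable and a parameter map. The step list, prompts, and function names below are assumptions for illustration only; they show the pattern rather than any particular library's session API.

```javascript
// Hypothetical steps: each one collects a single parameter for the back-end query.
const QUESTION_STEPS = [
  { key: "name", prompt: "Whose photos are you looking for?" },
  { key: "hours", prompt: "How many hours back should I search?" },
];

function createGuidedSession() {
  return { state: 0, params: {} };
}

// Stores the answer to the previous question, then asks the next one or finishes.
function advanceStep(session, answer) {
  if (session.state > 0) {
    session.params[QUESTION_STEPS[session.state - 1].key] = answer;
  }
  if (session.state < QUESTION_STEPS.length) {
    const say = QUESTION_STEPS[session.state].prompt;
    session.state += 1; // move the state machine forward by one step
    return { done: false, say };
  }
  const { name, hours } = session.params;
  return { done: true, say: `Searching photos by ${name} from the last ${hours} hours.` };
}

const guided = createGuidedSession();
console.log(advanceStep(guided).say);             // first question
console.log(advanceStep(guided, "John Doe").say); // second question
console.log(advanceStep(guided, "36").say);       // final confirmation
```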

Here’s an example from the funny.js chatbot skill. This skill tells a “knock knock” joke to the user, and manages state via a control variable. As the conversation progresses, the state is incremented and the chatbot responds with the next line.

function(req, res) {

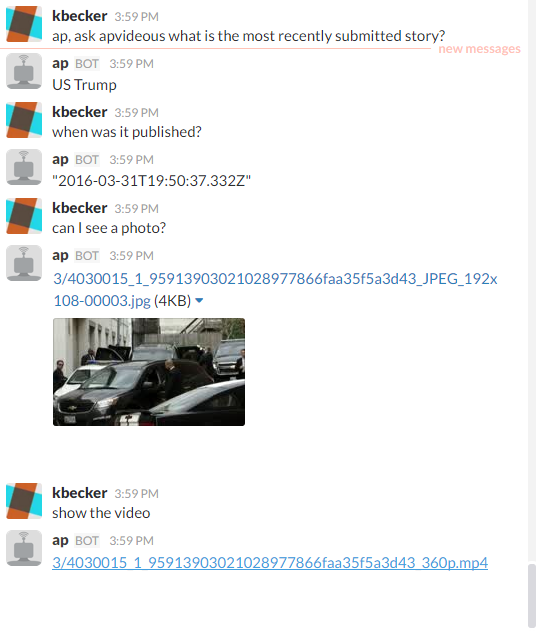

Responding With Media

Notice in the original example that, after the second user query, the chatbot responds with a photo. Conveniently, the image gets embedded within the Slack channel. This photo is actually the first search result within the array of results, requested by the user in the first query. The user could also ask for the second, third, or any later photo, in which case the bot will return the specified index from the array in the current session.

The final query by the user asks for the date of a more specific context item. This time, the user is asking about the specific photo that the bot just displayed. Again, we’re using session context, but narrowing it down from the array of results, to a specific item within the array. The bot is then able to respond with a date/time for the photo.

Manipulating Context

At this point, the subject of the conversation is a specific photo from the set of results (specifically, the first photo in the array). If the user were to take a step back in the context, they could ask for a different photo from the results.

The user might request, “what’s the third photo?”. The bot’s handler for this phrase must identify which session context item to query against. In this case, it knows that the user is asking for a photo within a list of results, so it’s able to query against the original search results array that was returned from the very first request. This, in turn, changes the context of the conversation to be about the third photo result.
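
Resolving a phrase like “the third photo” against the stored results can be sketched as a lookup from ordinal word to array index. The `ORDINAL_INDEX` table, the `selectByOrdinal` function, and the session shape are hypothetical; only the idea of indexing into the session's result array comes from the article.

```javascript
// Hypothetical mapping from ordinal words to zero-based array indexes.
const ORDINAL_INDEX = { first: 0, second: 1, third: 2, fourth: 3, fifth: 4 };

// Finds an ordinal word in the text and makes that result the current subject.
function selectByOrdinal(session, text) {
  const word = Object.keys(ORDINAL_INDEX).find((candidate) =>
    new RegExp(`\\b${candidate}\\b`, "i").test(text)
  );
  if (word === undefined) return null;
  const item = session.results[ORDINAL_INDEX[word]];
  if (item === undefined) return null; // index is past the end of the results
  session.current = item; // narrow the context to this one item
  return item;
}

const photoSession = {
  results: [{ id: "p1" }, { id: "p2" }, { id: "p3" }],
  current: null,
};
console.log(selectByOrdinal(photoSession, "show the first photo").id);    // p1
console.log(selectByOrdinal(photoSession, "what's the third photo?").id); // p3
```

Because the full result array stays in the session, the user can move from one photo to another without repeating the original search.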

Changing the Subject of the Conversation

When a user is ready to access a different service from the bot, they can request a different skill and then issue a query.

In this example, the user has requested a new query against the “apvideous” namespace. The bot will drop the current conversation context and switch to the new service. It matches the text against an intent within the service, which runs a query against a different database. The bot then responds with a result, in this case, “US Trump”.
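
Dropping the old context when the namespace changes can be sketched in a few lines. The `switchSkill` function and the fields on the conversation object are assumptions for illustration.

```javascript
// Hypothetical helper: switching to a different namespace discards the old context.
function switchSkill(conversation, namespace) {
  if (conversation.namespace !== namespace) {
    conversation.namespace = namespace;
    conversation.results = [];
    conversation.current = null;
  }
  return conversation;
}

const activeConversation = {
  namespace: "epix",
  results: ["photo-1", "photo-2"],
  current: "photo-1",
};
switchSkill(activeConversation, "apvideous");
console.log(activeConversation.namespace, activeConversation.results.length); // apvideous 0
```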

The subsequent conversation follows the same pattern as the first example, with the user asking questions against the current conversation topic: “when was it published?”, “can I see a photo?”, “show the video”, etc.

Skills and Intents

We’ve just taken a look at a conversational UI with a chatbot. Now, let’s take a step deeper to see how the bot actually works.

Since our bot is using the chatskills library to build a conversational UI, it works by using skills and intents. A “skill” is a namespaced service, such as “epix” in this example. An “intent” is a handler for sentences within the skill. For example, “how many stories were sent by [name] in the last [hours] hours?”. This sentence will trigger an intent, which knows to parse out the name and hours variables for execution in the query against the database.
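
To make the idea of parsing variables out of a sentence concrete, here is a minimal sketch that compiles a bracketed template into a regular expression. The bracket notation follows the sentence quoted above rather than chatskills' own slot syntax, and `compileIntentTemplate` is a hypothetical name.

```javascript
// Hypothetical compiler: turns "... by [name] in the last [hours] hours?"
// into a matcher that returns the slot values, or null when nothing matches.
function compileIntentTemplate(template) {
  const slotNames = [];
  const source = template
    .replace(/[.*+?^${}()|\\]/g, "\\$&") // escape regex characters, keep brackets
    .replace(/\[(\w+)\]/g, (_, name) => {
      slotNames.push(name);
      return "(.+?)"; // each slot captures as little text as possible
    });
  const regex = new RegExp(`^${source}$`, "i");
  return (text) => {
    const match = regex.exec(text.trim());
    if (!match) return null;
    const slots = {};
    slotNames.forEach((name, index) => {
      slots[name] = match[index + 1];
    });
    return slots;
  };
}

const matchHowMany = compileIntentTemplate(
  "how many photos were sent by [name] in the last [hours] hours?"
);
const slots = matchHowMany("how many photos were sent by John Doe in the last 36 hours?");
console.log(slots.name, slots.hours); // John Doe 36
```

Slot values come back as strings, so a handler would still need to convert `hours` to a number before querying the database.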

Here is an example of creating a skill.

var chatskills = require('chatskills');

Here is an example of creating an intent for this skill:

epix.intent('howMany', {

In the above code, you can see how we’ve encoded the various keywords and phrases that make up the query. This gives the user multiple ways to request the query. The code also parses the variables and stores them in the session. Finally, the photos service is called to search for the keywords.

Utterances

In the above code example, there is a series of encoded text patterns for matching against the user’s text. These are called “utterances”. They define the different ways a user may ask for an intent to execute. The chatskills library expands the utterance text into a list of different sentences that the user's text can be matched against.

For example:

'{to |}{say|speak|tell me} {hi|hello|howdy|hi there|hiya|hi ya|hey|hay|heya}'

The above utterance expands to:

helloWorld to say hi
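
The expansion step can be approximated with a short recursive function that replaces each `{a|b|c}` group with every one of its alternatives. This is a sketch of the general technique, not the library's own code; `expandUtterance` is a hypothetical name, and unlike the listing above it does not prefix each sentence with the intent name.

```javascript
// Hypothetical expander: produces every sentence an utterance template can match.
function expandUtterance(template) {
  const match = /\{([^{}]*)\}/.exec(template); // first {...} group, if any
  if (!match) return [template];
  const before = template.slice(0, match.index);
  const after = template.slice(match.index + match[0].length);
  // Substitute each alternative, then expand the remaining groups recursively.
  return match[1]
    .split("|")
    .flatMap((option) => expandUtterance(before + option + after));
}

const sentences = expandUtterance("{to |}{say|speak} {hi|hello}");
console.log(sentences.length); // 8
console.log(sentences[0]);     // to say hi
```

An empty alternative, as in `{to |}`, makes the word optional, which is how a single template covers both “to say hi” and “say hi”.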

Advantages of a Conversational UI

When is a conversational UI more appropriate than a graphical user interface? We’ve previously discussed the growing popularity of conversational UI. However, beyond mobile and medium, in which cases might this type of interface be more applicable?

It seems obvious that mobile apps, specifically messaging platforms, can benefit from conversational UIs. If the user is already within a messaging system, it’s only natural to perform tasks in the same fashion, rather than clicking a link or icon to access outside windows and interfaces. This is an example of where a conversational UI may work better.

Another potential use for conversational UI is when the tasks to be performed are numerous, exist within a crowded UI, or require time-intensive configuration. Complicated action scenarios might typically require the user to select several different UI elements and move between different screens to complete an action. Provided the services are accessible through an API, a conversational UI may offer an easier solution. With a single sentence, a bot may infer many of the required configuration pieces and complete the action. If additional details are needed, a bot can prompt the user through a hierarchy of questions, guiding them to the desired result.

And Pitfalls

Of course, bots and conversational UIs are not a solution for every software product. A classic historical example of this is Microsoft’s Clippy. One of the top concerns when developing a bot-like interface is to keep it from becoming annoying or intrusive.

When designing any type of bot or conversational UI, it’s important to keep the user’s best interests in mind. Avoid intruding on the user’s work. A bot should only be activated upon explicit request, and when it is, it should remain unobtrusive and possibly even out of sight. With this in mind, the conversational UI can become a powerful addition to a user’s computing needs.

About the Author

This article was written by Kory Becker, software developer and architect, skilled in a range of technologies, including web application development, machine learning, artificial intelligence, and data science.
