Introduction

How do you create an artificial intelligence agent that can navigate a world within a game? Popular methods of AI today, notably machine learning, describe its use in building predictive models, natural language processing, and image classification. However, AI has numerous other applications, specifically of growing importance, aiding computers to navigate a world.

Artificial intelligence has long been used to allow robots to navigate a world using a variety of techniques, such as AI planning, the A* algorithm, and other intelligent heuristic strategies. In addition, branches of artificial intelligence including symbolic AI and logic-based AI can be utilized to deduce information from a given world, in order to produce conclusions about the computer’s current state and what to do next.

As our societal needs continue to expand into the care of computers, robots, and machines, it’s becoming increasingly important to allow computers the ability to understand the world around them and to make informed decisions in order to execute a plan.

A convenient example of how AI can be used to navigate a world is through games.

Exploring AI Through Games

Games provide an ideal environment with which to implement AI agents, provide state, and implement a discrete deterministic world. The AI can reason about its surroundings in order to decide on an action to take to achieve a goal.

In this article, we’ll examine a dungeon-based game, Wumpus World. In this game, the player is tasked with navigating a dungeon of rooms, avoiding obstacles such as pits and monsters, and finding the treasure. The player will be provided with hints, in the form of perceptions, within each room (just as a robot would have “senses” in order to measure its surroundings), and may deduce conclusions about the current state within the dungeon and decide which room to move to next.

Wumpus World is used in multiple examples throughout Artificial Intelligence: A Modern Approach to describe the application of a variety of AI techniques to solving navigation, logical deduction, and intelligent agent behavior within a world.

We’ll explore a web-based version of Wumpus World, developed in HTML, Javascript, and React. We’ll also examine how to implement an AI that can navigate Wumpus World successfully, using techniques including logic-based artificial intelligence, neural networks, machine learning, genetic algorithms, and reinforcement learning.

What is Wumpus World?

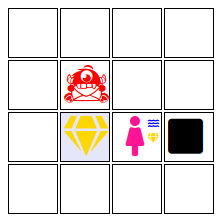

Wumpus World (also called Hunt the Wumpus) is a dungeon-based game. The goal of the game is for the player to traverse the rooms within the dungeon, avoid the enemies, and find the treasure. Within each room, the player is given certain hints, also called perceptions, that indicate what is located within an adjacent room. The player experiences perceptions in the form of sight, smell, and touch. The perceptions that are available include the player feeling a breeze, which indicates that a pit is nearby in a connecting room. If the player enters that room, the game is over. Similarly, the player may smell a stench, indicating that an adjacent room contains the Wumpus. Finally, the player may see a glitter, indicating that the treasure is in an adjacent room.

At each turn, the player must use the perception hints within each room in order to determine which room is safe to move to next. The player may then choose an action to move up, down, left, or right, or to fire an arrow (if they have not yet fired it yet). Diagonal movement is usually not permitted. Additionally, perceptions are only provided for rooms directly adjacent to the player’s current room (not on a diagonal).

Why Wumpus World?

Wumpus World provides a simple simulated virtual environment, with a small subset of senses that you may naturally experience within the real world. By allowing the player or AI agent to experience perceptions of sight, smell, and touch we can demonstrate methods of utilizing these perceptions in order to make deductions about the player’s (or robot’s, in this case) surroundings.

Imagine being able to represent the real world, or a subset of it (such as a factory floor) in a virtual environment with a discrete set of actions. In this manner, a robot can be implemented and trained to optimally perform within the given parameters. Of course, the real world is not discrete, and often results in seemingly random actions and effects. However, we can do our best to represent information to a robot in an orderly and deterministic fashion through a simulated environment.

Wumpus World is used as a demonstration of applying artificial intelligence concepts to a discrete, deterministic, single-player environment. In this environment, the player, robot, or computer agent has the ability to make decisions upon which action to take next in order to achieve the desired goal of finding the treasure and avoiding obstacles.

As the computer navigates the world and learns more about each room, the AI is able to build a knowledge-base of information about the world (the dungeon layout) and each room. As the AI obtains more hints and perceptions within their environment, the knowledge-base can be updated for the adjacent rooms. This allows the AI to change the likelihood of a room containing certain items, obstacles, and intelligently determining optimal paths to take to reach a goal (i.e., the treasure).

You feel a breeze

As an example, consider the case of a room in the middle of a map containing a breeze. The AI can deduce that a pit is located in an adjacent room to their current one, either right, down, left, or up, as shown in the example below.

We could say there is a 25% chance that an adjacent room contains a pit, given this perception.

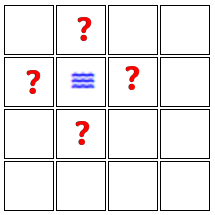

Now, let’s suppose that the player moves up and then right, experiencing yet another breeze, as shown in the example below.

At this point, we know that one of the adjacent rooms contains a pit. Since we’ve already been made aware that the room to the bottom has a 25% of containing a pit from the left-most diagonal adjacent room, we can now deduce there is an increased probability of the room to the south containing a pit (closer to 50%).

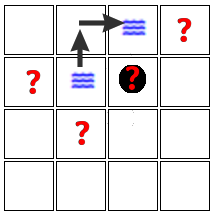

It turns out, in fact, that this would be the correct guess for the particular dungeon board being played. As shown in the image below, there is indeed a pit located to the south of the player, which the player has correctly identified based upon logical deduction.

Notice how in the above example, the player experienced a breeze in one room and by navigating to the adjacent diagonal room to a suspected pit, and experiencing another breeze, the player is able to deduce that the room may indeed contain a pit. Of course, the second breeze could be from a different room, but it still serves to adjust our guess accordingly.

Wumpus World and Hunt the Wumpus allow the exploration of a variety of AI techniques and algorithms. Some of these artificial intelligence algorithms include symbolic AI and logic-based AI for implementing predicate and first-order logic to calculate likelihoods of conditions within each room. Additionally, machine learning techniques such as deep learning, neural networks, and supervised learning can be leveraged to train on a pre-existing data-set of acceptable moves, given certain conditions on the map, and the state of the player. Machine learning can allow the AI to navigate to safe rooms, when a recognized or similar player and map state is experienced. In addition to machine learning, evolutionary computation and genetic algorithms may also be used to train the optimal set of weights for a neural network in order to make the most optimal moves within a given state.

Finally, to make the exploration of AI techniques and strategies within a simulated world as easy as possible to experience, Wumpus World is implemented in the web browser and easily playable by all users.

Let’s take a look at how an artificial intelligence computer player agent can be implemented to navigate Wumpus World.

Artificial Intelligence Strategies

A variety of strategies exist within many different branches of artificial intelligence to successfully navigate the dungeon in Wumpus World. These range from logic-based AI, machine learning with neural networks, reinforcement learning, and even genetic algorithms. We’ll take a look at each of these methodologies. However, let’s start with one of the most intuitive methods for traversing a dungeon filled with obstacles - beginning with logic!

Elementary, My Dear Watson

Sherlock Holmes was famous for using logic to solve a plethora of tricky crime cases, allegedly expressing how “elementary” the process was. In the case of successfully navigating a dungeon filled with peril, we can similarly use logic to reason about our surroundings given the array of perceptions that we experience while moving into each room.

As we’ve described earlier, when a breeze is perceived in a given room, we know that a pit is nearby. Similarly, when you experience a breeze in two nearby locations, you can logically deduce that a pit is more likely to be located within the adjacent room to the corresponding breeze percepts. A player naturally navigating a dungeon-based game in this manner is using a logical-based technique.

Most humans playing similar games will employ logic in order to build a mental model of the map and the associated hazards. They’ll use this model to reason about which rooms are safe to move to and which have yet to be explored, in order to find the treasure with the least number of moves.

A logic-based process is one of the simplest methods for building a knowledge-base and navigating a world, when you have the ability to gain knowledge from each room, in the form of perceptions. It turns out, this same process is contained within a subset of artificial intelligence called logic-based AI, and may be used to develop an automated strategy for implementing an intelligent agent.

Crawling a Dungeon with Logic-Based Artificial Intelligence

Wumpus World has several key traits that make it fitting for a logical-based AI solution. Wumpus World exists as a discrete, deterministic, partially observable, single-agent world. It’s these key dimensions of an artificial intelligence environment that direct us towards specific AI algorithms and techniques for navigating the world. Let’s take a look at each of these traits in order to understand the selection of AI techniques that work best.

A Discrete Snapshot of the Real-World

First, the world in Wumpus World is discrete. This means that the map has a finite number of rooms, actions, and outcomes. Once a game has begun, the size and contents of the world do not change over time. In fact, the size of the map is bounded by specific limits for the number of rooms. Similarly, the number of pits, the wumpus, and the treasure are all limited to a specific number of occurrences. It just so happens that a given room has a 20% chance of containing a pit. The location of the wumpus and treasure are random.

By contrast, a continuous environment is one where the world can change upon any number of effects. A convenient example is a multiplayer world, where the actions of other players or even the developers can alter the size and contents of the world, with unknown effect to the player. Perhaps, as the player moves across the map, more land and areas are revealed in real-time (similr to MUD or MMORPG games).

Since Wumpus World is discrete, it allows for reasoning via knowledge-base that will be unchanged through the course of the game.

A Perfect Deterministic Environment

The second key trait for Wumpus World is that the game is deterministic. This means that any action has a known and expected outcome, given the current state of the game. If the player chooses to move east, they will indeed move to the room to the east. If a player perceives a breeze, there is indeed an adjacent room that contains a pit. The complete list of available actions, percepts, and rules of the game are known prior to even beginning the game. There are no random chances, random outcomes, or uncertainty in player actions.

By contrast, a stochastic environment is one where random effects may take place. This more accurately describes the real-world (or typical battles in a role-playing RPG game), where any number of seemingly random effects may change the current environment in unexpected ways.

Seeing Through the Fog-of-war

Wumpus World is partially observable. This means that while the dungeon contains a known number of rooms, width, and height, the player only has knowledge of the rooms that they have already visited. The rest of the map is unknown and the contents of those rooms are not visible to the player.

This contrasts with a fully observable world, where all rooms, tiles, moves, etc are known to all players in the game. For example, chess, checkers, and backgammon are fully observable games, since both players’ moves may be observed and recorded. When a game is fully observable, different AI strategies may be employed (such as the Minimax algorithm for determining the next best move and other brute-force strategies).

We’re All Alone in This World

Finally, Wumpus World is a single-agent environment. The game is not affected nor playable by simultaneous players, nor is the player in competition to score higher than another player in order to win the game.

As the player is only in competition against oneself, algorithms that take into account limiting actions by the other player (brute-force tree exploration strategies) or minimizing their score (the Minimax algorithm) are not likely to be used. Instead, an AI only needs to focus on the optimal goal for the single player, which in this case, is to avoid the obstacles and find the treasure.

What Comes Naturally is Logic

A simple AI strategy for navigating the map of Wumpus World is to use logic-based artificial intelligence. This comes naturally for many players. At each step in the game, you examine any precepts discovered about adjacent rooms and build a knowledge-base of the game state. Using this database of knowledge, you determine the probability that a given room is dangerous or safe.

Logic-based AI can be implemented in a manual strategy by encoding certain conditions to check for within your AI’s code. For example, you may create a rule for your AI to only move to rooms where no breeze has been experienced in adjacent rooms. This is a logical rule, as it ensures that a room is safe - at least, to a known degree of having visited the adjacent rooms first.

Logic-based artificial intelligence rules can be encoded in a more algorithmic approach by using first-order logic. This allows us to write rules, using functions and variables, in order to create logical sentences to instruct our AI on how to behave.

Let’s take a look at building a rule to check if a room is safe.

In Wumpus World, a room is deemed safe if it does not contain a pit nor a wumpus. Let’s declare the following variables, as shown below.

1 | Rules: |

With the above variable definitions, we can begin to describe rules that declare a room as containing a pit, a wumpus, treasure, or being safe.

1 | 1. Adj(R)^B(R) => P(R) |

In the above example, the first rule says if R is adjacent to the current room and a breeze is experienced in the current room, then a possibility exists of the adjacent room containing a pit.

Likewise, the second rule says that if R is adjacent to the current room and a stench is experienced in the current room, then a possibility exists of the adjacent room containing a wumpus. We follow the same logic for detecting a treasure.

Finally, the fourth rule says that if no pit exists in a given room and no wumpus exists in a given room, then the room must be safe.

Using these rules, we can now determine whether a room is safe. Let’s take a look at a complete example.

Note, for the sake of keeping the formulas simple in this article, we’ve omitted details at looking at all adjacent rooms to a target room in order to denote it as being safe. That is, if there is a breeze in any adjacent room, then the current room may contain a pit. A more precise description of this rule, using E for exists, might be: E(Adj(R1, R2) ^ B(R1)) => P(R2)

Example: Is R(2,1) safe?

In order to determine whether the room at X=2, Y=1 is safe, we need to satisfy a logical statement, determining if R(2,1) is O (OK).

Satisfy: O(R21) = True

Let’s expand on the first-order statement by breaking it down into its inner components. For the R21 to be OK, we know that we need to satisfy rule 4 from our list above. That is, O(R) is true if there is no pit and no wumpus in the room. Therefore, we can express this statement as shown below in rule 5.

1 | 5. !P(R21)^!W(R21) => O(R21) |

Rule 5 states that R21 = OK is implied if no pit exists in the room and no wumpus exists in the room. We built this using rule 4 above. Next, we need to expand the clauses for a room containing a pit and a wumpus and we should be able to fully evaluate our logical statement and reach a conclusion.

1 | !(Adj(R)^B(R)) ^ !(Adj(R)^S(R)) => O(R21) |

In the above expansion, we’ve separated out rules 1 and 2, while keeping them negated, in order to determine that R21 is safe. At this point, we can execute basic conditional checks on the current game state to evaluate whether the adjacent room contains a breeze and/or stench, in order to satisfy the implied conclusion of the Room at X=2, Y=1 being safe (O(R21)).

Note, a more precise rule to describe a room as being safe (checking all adjacent rooms for a breeze) using E for exists, would expand to: !(E(Adj(R, R21) ^ B(R))) ^ !(E(Adj(R, R21) ^ S(R))) => O(R21)

While the above logic is a formal definition of how logical-based AI can be used in Wumpus World, you’re probably most likely programming these rules in your AI naturally, using basic conditional logic.

Logic-based AI Has Its Limitations

Logic-based AI requires determining all conditions and calculations at the very beginning for a specific world. Any logical condition that is not accounted for will not be handled by the computer player. For this reason, logical AI requires a discrete environment, where a finite list of possible conditions exist from which rules may be created.

When the world is too large and the rules become too complex, other AI strategies may prove more successful and simpler to implement.

Let’s take a look at how machine learning can be used, specifically using supervised learning to train a computer AI agent to navigate the world.

Giving the AI Some Brains

Rather than attempting to create a logical rule and associated set of conditionals for every possible case within an environment, we could instead train a computer on a subset of those conditions with the goal of the AI generalizing on the training data. Once trained, the AI should be capable of making informed decisions about never before seen states within the environment, that match similar conditions to those within the training data. This is the idea behind supervised learning.

Serving as a Teacher

Supervised learning requires creating a training set and a test set of data. The data consists of example cases from the world, such as describing specific conditions and states, along with corresponding moves that would be the most optimal most to take under each condition.

As an example, we might describe a single training case as the current position of the player, the number of adjacent rooms, whether a breeze, stench, or glitter is perceived, and an optimal move of right, down, left, or up for the specific case.

Using the above example training case, we could build out a larger list of potential scenarios, each with their own corresponding best move to take under the circumstances. If a room contains a breeze, but the prior room to the south did not, perhaps the AI should move back to the room to the south.

By combining each potential state in our training set with a desired action from the AI (move right, down, left, up) we are essentially classifying each training set case as a specific movement.

For example, if we represent the four movement directions as 0-4 and each state condition in the world as a 0,1 (for simplification purposes), we might have rows in our training set that appear as shown below.

1 | 0 = up |

1 | 1,0,0,0,1,1 => 0 |

Unlike logic-based AI, where we tried to create a rule for every possible condition and state in the world, with a neural network we only require a subset of those cases. By giving the AI enough varying training data to learn from, it should be able to generalize across many new scenarios and states.

Once the AI has been trained to a desired degree of accuracy on the training set, the test set (which contains new scenarios, not yet seen by the AI) can be checked to see how well the neural network performs.

Note, a neural network isn’t the only machine learning algorithm that can be for classification. We could also use logistic regression, a support vector machine, knn, or any other statistical-based classification algorithm. The idea is that we’re classifying each case within the dungeon state as a specific move for the player to take.

Taking Our Class to the Real World

Let’s try our supervised learning classification on a real example from Wumpus World, at the starting state of the game. When the player first enters the dungeon, they will be located in the bottom-left corner of the game. Let’s see what a data-set might look like for all possible conditions in the starting room, along with the associated move that the player should make. We’ll then split the data-set into a training and test set to see how well the AI would perform.

Training a Neural Network with Supervised Learning

Let’s start off with a simple training data-set, built from the starting position on the Wumpus World dungeon map and using various samplings of positions across the map. The idea is to capture a handful of different possible states on the map and allow our machine learning model to learn the best move to make in those positions. We’ll provide the optimal move in our training data for the AI to learn from, with the hope that the AI can generalize to states on the map never seen before.

Below is a list of the features included in the training data.

1 | room_n, room_e, room_s, room_w, breeze, stench, glitter, move |

Each room can hold a value of unvisited, visited, wall.

Each precept is a boolean of true or false.

The y-value for move is a direction of north, east, south, west.

An example of some data for training an AI to play Wumpus World based on our training csv data is included below.

1 | room_n,room_e,room_s,room_w,breeze,stench,glitter,move |

As an example, let’s take a quick look at the first row. The first row holds an example state where the player is located in the bottom-left corner of the dungeon (the starting position). There is a wall indicated to the player’s south and west, along with two unvisited rooms, one to the north and one to the east. There are no precepts, therefore no hazards to be concerned about. In this case, an optimal move would be to either of the unvisited rooms. The AI could choose to go north or east. However, we arbitrarily pick north and let the AI train with this.

Training Machine Learning Models for Wumpus World

Let’s try training a handful of different machine learning algorithms on our training data and run the model against a training and test set to determine how well our data does in predicting the optimal move from each example position in the game.

Our data-set includes a total of 68 rows (corresponding to 68 samples of positions and states in the game). Note, this is a very limited subset of total possible positions, but the idea is to see how well the AI can do with such a limited source of data.

We’ll start by using R to build a model from the training data. The complete code for this example can be found here.

First, we’ll define a helper function for setting the random seed so that the results can be reproduced.

1 | library(caret) |

We can now set the training control for the caret package so that our machine learning models can be reproduced from the training data.

1 | seed <- 1002 |

To reproduce the same results as this article, use the same random seed as shown above (1002).

Next, we read the csv data and create a training and test set, consisting of 70% training rows and 30% test rows.

1 | # Split the data into a training/test set by 70% training/30% test. |

Finally, we can build the models and check the results. As the caret library provides a single format for training models, an example of the code used to train one of the models is shown below. All other models trained use the same lines of code.

1 | fit <- train(move ~ ., data = training, method = 'svmRadial', trControl = myControl) |

A selection of AI machine learning models and their associated accuracy performance is included below.

Support Vector Machine

Correct: 6/19 = 32%

Multinomial Logistic Regression

Correct: 8/19 = 42%

K-nearest-neighbors

Correct: 10/19 = 53%

Random Forest

Correct: 16/19 = 84%

A random forest model produces the highest accuracy on the training data when checking against the test data, with an accuracy of 84%. We can further see details in the results with a confusion matrix as shown below.

1 | confusionMatrix(results, test$move) |

1 | Confusion Matrix and Statistics |

Not bad! Let’s finish off the training using the random forest model by training against the entire data-set and then we can try running the AI in the game.

1 | # Finally, train a model on the entire data-set so we can play the game: Correct: 67/68 = 99% |

The results of training on the entire data-set give us 99% accuracy.

Does It Actually Work?

What we’ve really been waiting for is to find out if a machine learning model actually works in playing Wumpus World. Let’s find out!

Using the best model that we’ve found with a random forest, we’ll start a new game of Wumpus World and feed each state of the game to our model, asking the AI to determine the best move to make.

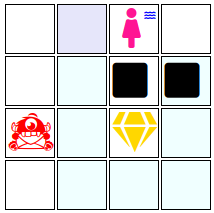

Here is an actual run of the game and the corresponding moves played by the AI.

1 | > custom <- data.frame(room_n='unvisited', room_e='unvisited', room_s='wall', room_w='wall', breeze='false', stench='false', glitter='false') |

Let’s analyze the above states and each move. First, the player begins in the initial position in Wumpus World, in the bottom-left corner of the map. There is a wall to the south and to the west. The only valid moves are north or east.

The AI chooses to move north.

We’ve now moved up a room. There is still a wall to the west. However, now we also have rooms available to the north, east, and south. Of course, the room to the south is where we just came from, so it is marked as visited. We’ve also experienced our first precept of breeze. This means a hazard is nearby in an adjacent room. Since a pit could be located in either of the unvisited rooms to the north or east, the AI chooses the only logical option in this case, which is to move back to the room we can from, which we know for certain is safe.

The AI chooses to move south.

We’re now back in the room that we came from. This is exactly like the starting state, except that now the room to the north is marked as visited. We know that the room to the north also contains a breeze, so if we can, we should go to a different room next. Indeed, the AI agrees.

The AI chooses to move east.

In the last move, we’re in a previously unvisited room along the bottom row, with a wall to the south, and rooms available in all other directions. Of course, the room to the west has already been visited. Since we have no hints of danger, we are free to safely move north or east.

The AI chooses to move north.

Is the AI Just Wandering Around?

Given the data-set that we’ve trained upon, it may indeed appear that AI is wandering around. However, keep in mind, with the limited features contained within the data-set, the AI is in fact avoiding obstacles and attempting to prioritize visiting previously unvisited rooms. The exception is that if a room contains a breeze or stench, the AI will always avoid moving to an unvisited room and instead move to a previously visited room that it knows is safe.

If the AI were to always perform in the above trained manner, it’s likely that it would be unsuccessful in always locating the treasure. Further, it would likely end up in a repeating loop - avoiding obstacles and moving back to safe locations. Wumpus World, by design, generates maps randomly at the beginning of a game, creating scenarios where the player must risk moving to an unvisited room even when a breeze or stench is detected, taking a risk, in order to press forward and locate the treasure.

Forgetting What We’ve Already Seen

It’s clear that our training data is missing short and long term memory information to make the above strategy of risk more effective. Specifically, the AI can take into account when it perceives a breeze, stench, or glitter, in order to backtrack to a room with a glitter in order to hone in on the treasure.

Consider this example. The AI enters a room with a glitter. Based upon the training data, the AI decides to move into an unvisited room. No glitter is seen in this room. Our training data will not allow the AI to move back into the previously visited room, containing a glitter, since that room was already visited and a new unvisited room exists (which is prioritized higher in the training data). What we actually want to happen, is for the AI to move back into the previous room with the glitter, and then move to the other unvisited room to locate the treasure. However, our training data just doesn’t include a feature for this case.

In order to give the AI some form of short term memory about previous rooms, we would need to expand the feature set contained within our training data to include more information about adjacent rooms, in addition to the current room. Rather than only recording if a room is visited or not, perhaps we should also record whether a room contained a glitter. This would give the training data a way to express moving back to the room with a glitter and then proceed.

Playing Like a Pro

Expanding upon the idea of growing our data-set to include more features, the key behind most successful machine learning models is not just the quality of the data, but the amount.

In our example above, we’ve only trained the AI model of a handful of selected samples of game play. However, if we were to provide a much larger volume of samples, the AI may be able to perform more effectively across a larger number of differing states within the game.

While the initial data-set was created manually, it is often more effective to record game-plays by an expert or professional, while the game is actually running. In this manner, each move that the expert player makes in the game can be recorded and saved to the data-set. Provided the player typically plays an optimal game, these records can expand upon the optimal moves to make at each given state in the game, offering the AI vast amounts more of training data to base its machine learning model upon.

The web-based game of Wumpus World described in this article contains an expadable knowledge-base panel in the web page UI. This knowledge-base records probability of items within each room, along with the number of visits to each room. A supervised learning algorithm using a data-set that is composed of snapshots of this knowledge-base at each move (or subsets thereof), may prove more effective in capturing intelligent player strategies.

Too Much Data and Too Little Time

The more feature data that we can provide to the training data, the better the model that we can hope to train. However, as Wumpus World expands in size, the number of possible states continues to become exponential. In this manner, preparing a training set csv file for even a subset of cases can prove challenging.

For this reason, it may be better to move beyond supervised learning with a training set, into the realm of unsupervised learning.

Training a Neural Network with a Genetic Algorithm

As we’ve seen earlier, supervised learning can indeed be used to train an AI machine learning model on which optimal moves to make under specific conditions and states within the game. However, the complexity of the game expands exponentially with the width and height of the map, not to mention potential states within the rooms. This can lead to an unrealistically large training data-set of possible states in the game.

Rather than using supervised learning with a training set where we specifically indicate the optimal move to make at each game state , perhaps we could allow the AI to figure out the optimal move itself. This is the key behind unsupervised learning.

Growing Up As a Baby

We can implement unsupervised learning using a machine learning model of a neural network and allow the AI to train itself in order to determine the best possible move to make at each position and state in Wumpus World.

Recall, previously we’ve used several different supervised learning classification machine learning algorithms to predict the optimal move. However, let’s take away the need to specify the optimal move - or even to provide a training set at all.

Instead, we’ll use a neural network and let the AI figure it out!

A neural network contains a series of nodes. Each node is connected to additional nodes via weights. The weights are changed at each iteration in training in order to reduce the error rate detected.

One method for implementing unsupervised learning with a neural network is by using a genetic algorithm.

Evolving the Best Brain

We can create a neural network of varying depth (deep learning would work here as well). Rather than using backwards propagation to train the neural network, we’ll instead assign the weights from a randomly generated array of floating point values. We’ll then gradually alter the weights with a genetic algorithm.

A genetic algorithm requires a fitness function in order to determine how well a particular genome performs. By allowing the AI to actually play out a complete game of Wumpus World, we can assign a score from the game, according to how many moves it took to locate the treasure (or a penalty for losing the game). The process works as follows.

Training a Neural Network with a Genetic Algorithm

- Generate 100 random arrays of floating point values, of length equalling the number of weights in our neural network.

- Assign the floating point values from each genome to a corresponding neural network (one gene per weight in the network).

- Assign the neural network to the AI and allow it to play the game.

- Assign the score from the game as the value for the fitness function for that genome.

- Evolve the genomes using roulette selection, crossover, and mutation to slightly alter the genes and improve the next generation (selecting the best performing neural networks to move on).

- Repeat steps 1-6 until a desired score is found.

Another key to the above idea of using a genetic algorithm to train a neural network is that we need to design the neural network to appropriately take as input, the necessary features about the game state (just like our supervised learning training data-set). We also need to design our output accordingly, to indicate the desired move to make. In this manner, the number of input nodes for our neural network could be quite large. The number of output nodes may be as few as four (one for each direction, with the highest probability value being the direction to select).

Navigate the World with Bayesian Probability

So far we’ve discussed using logic-based artificial intelligence AI techniques and a machine learning model using both supervised and unsupervised learning with genetic algorithms to play Wumpus World. While these techniques prove successful to a degree, we’ve identified key weaknesses behind the approaches. One weakness is the lack of longer-term memory when deciding on a move to make. It may often be the case that the player needs to backtrack to a previous room in order to avoid a series of obstacles, such as a line of pits.

Even with our logic-based AI, where we can account for nearly every potential state in the game (as the game is discrete and deterministic), there is still a potential issue with the granularity of our knowledge-base.

Recall, with our logical-AI approach (and to a degree, with our machine learning models) we’re recording limited information about each room. Specifically, we’re recording if a room contains a precept (breeze, stench, glitter), and adjusting our knowledge-base accordingly for the room. If we’ve detected a breeze from two adjacent locations to a room, we have a higher probability that the target room contains a pit.

However, just how likely is it that the room actually contains a pit?

The Room “Probably” Has a Pit

While applying a numeric factor to the knowledge-base for a room, after experiencing certain hints from the adjacent rooms, can give the AI an idea of how likely it is that the room is dangerous, we can make this process more formal.

By using bayesian probability and applying it to the calculations on each room’s contents, we can obtain a more granular view and decision on whether a room is safe to move to.

Bayesian probability combines prior assumptions along with the current state of the game, in order to calculate an exact probability for the room actually containing the obstacle or item in question.

There are a variety of implementations and discussion topics of bayesian logic for Wumpus World available for review for more detail.

Navigate the World with Reinforcement Learning

Designing a game playing AI agent isn’t complete without a discussion on reinforcement learning. Many AI implementations for games are based upon the key artificial intelligence technique reinforcement learning.

Reinforcement learning uses the idea of providing a reward value for each successful move or complete game play, in order to allow the AI to improve over time. The more plays that the AI partakes in, the better the results in game-play.

While our genetic algorithm technique for training a neural network is one form of reinforcement learning, in that we gradually improve each genome according to the fitness function after game-play, a more typical strategy involves the use of Q-learning.

Using Q-Learning

Q-learning is a type of reinforcement learning in artificial intelligence that is able to improve over-time, based upon a quality score at each successive play. The algorithm learns the quality of decisions, based upon state and actions taken.

Q-learning is based upon the Markov decision process, which provides a concrete mathematical technique for making a decision based on outcomes that are partly random. Q-learning is able to optimize the markov decision process to find an optimal policy that maximizes the game score, and therefore, excels at playing the best game.

The “Q” in Q-learning stands for the reward that is provided at each iteration of training. A simple implementation is a reward of +1 if the player wins the game with a higher score or -1 if the player ends up with a lower score than the previous one.

Let’s Play a Game: Hotdog

Q-learning can be better understood with an example. Let’s take a look at a simple game called, Hotdog. We can examine how Q-learning is implemented within this basic game, in order to expand upon how this could be used in other games, such as Wumpus World.

Consider our example text-based game, “Hotdog”, inspired by the classic arcade game, Burger Time.

In the simplified version of the game, we have a fully-observable 2-D array of spaces where the player can move in a line, left or right. There is an enemy hotdog (yes, it’s a hotdog, see the game description for details!) and a treasure. The player can move left or right any number of spaces to reach the gold and increase their score +1. If they hit the hotdog, they receive a penalty -1, lose the game, and start over.

Since the game is text-based, the rooms are simply represented as a series of dots, as shown below.

Symbols Used in the Hotdog Game

1 | . = room |

For example, one display of the game may appear as shown below.

1 | ....G....P..H.. |

In the above, the player is located towards the middle right of the game board. The gold is located to the left of the player. The hotdog is located to the right. If the player were to move towards the right, they would collide with the enemy and lose the game. If the player moves to the left, they can reach the gold and win this round of the game.

Learning to Use Sight

The hotdog game is extremely simple. In fact, the game board is fully viewable by the player, so that the player can see if the gold is to the left or right and move in that direction. A human playing this game would have no problem winning - simply move in the direction of where the gold is (unless the hotdog is sitting in front of the gold, blocking the player, in which case that game-play is an automatic loss).

However, consider a completely random AI playing the game. Without using an informed decision from detecting (via sight) if the gold is to the left or the right of the player, and just moving randomly, the AI will perform poorly in reaching the gold. In fact, the AI is even provided with the coordinates of the player and the treasure at each round (just as the player would be able to see), and yet still fails to perform.

This can be demonstrated more clearly in the game recordings below.

AI with no training

AI with 1,000 points of training

A random AI plays extremely poorly, simply choosing to move left or right with no informed decision process at all. Naturally, the AI obtains a poor score, since it frequently hits the hotdog just as often as the gold, or sometimes never reaches any item at all (and times out)!

By contrast, after 1,000 points worth of training the AI with Q-learning and a reward system, you can immediately tell how much more effective the AI is at playing the game. It nearly always moves in the correct direction to locate the gold, followed by moving towards the hotdog to end the game - only after obtaining the gold (the AI is intentionally ending the game in this manner to avoid infinite game-play loops and optimizing the number of steps to complete a game-play)!

Keep in mind, we’ve never actually told the AI how to play the game nor even provided the rules - it figured this out on its own based upon the game state.

Q-learning trains an AI agent to perform exceedingly well at playing the game, comparable to the successful game-play by a human (who can fully see the game board and move in the correct direction).

The AI trained with Q-learning has effectively learned how to “see” the game board, just like a human with eyes. However, we never actually taught the computer anything about visibility - it learned this on its own, based upon the position of the player and the gold, determining which direction resulted in a higher score for each situation.

This same process can be applied to Wumpus World for the varying game states in order to optimize the AI’s moves during game-play.

You may have realized that we did not provide the position of the hotdog to the player as part of the state for the AI. It turns out that due to the simple nature of the game Hotdog, the position of the enemy is not needed. The AI simply learns to move left or right towards the gold, regardless of the position of the hotdog. If the hotdog is blocking the gold, the player has no choice in the matter. On the other hand, if we provided the player an additional action, such as a salt shaker to attack the hotdog, the AI could learn an additional strategy for winning the game.

Conclusion

In this article, we’ve reviewed several techniques for designing an artificial intelligence AI agent player for the game Wumpus World. We’ve discussed implementations using logic-based artificial intelligence where we’ve identified all possible states in the game and provided rules accordingly. We’ve also discussed machine learning model implementations, using both supervised learning with a recorded csv file training data-set, and unsupervised learning where we trained a neural network using a genetic algorithm. We’ve also reviewed an implementation of Q-learning, where the AI agent learned how to “see” the game board and identify how to move towards the gold, even when it was never actually given a rule to move towards the gold.

Artificial intelligence provides a large number of powerful algorithms and techniques for building game-playing agents. However, games aren’t the limit of an AI agent.

Games provide a convenient environment with which to demonstrate rules and effects in a given discrete world. By training an AI within a game environment, it could potentially be utilized within the real-world in a controlled manner. Games and simulations are often used when training AI algorithms prior to running them in real-world scenarios. However, it is still important to keep in mind the differences between the perfect discrete and deterministic environment of a game, compared to the often random effects within the real-world.

About the Author

This article was written by Kory Becker, software developer and architect, skilled in a range of technologies, including web application development, artificial intelligence, machine learning, and data science.

Sponsor Me