Introduction

Can computer software be designed to be more emotional? Imagine the idea of conversing with your computer, perhaps checking the weather. The weather appears to be cold and rainy for the early part of the day. This naturally brings a certain feeling of negativity, perhaps even dread, to most people. Typical computer programs of today will simply report the weather and prompt for the next query, without giving the user’s disposition a single thought. This develops little to no empathy with the user, and could even result in a negative association with the software. This is of particular impact to conversational UI, which relies on successful and repeated human interaction in order to produce results.

What if a computer program using artificial intelligence instead responded with a more positive outlook? Perhaps the software could mention that, while it’s currently raining outside, it will warm up with clear skies later this afternoon. This could be done without the user specifically asking about the weather later in the day. Imagine the effect this could have on the individual using the software. Would this motivate the user to interact with the computer more? A computer that can form a more intimate connection with the user could very well end up stimulating increased usage of its software, ultimately resulting in higher demand and productivity, not to mention increased profits for the company that develops it!

In this article, we’re going to build a proof-of-concept program, capable of simulating emotion in software, also known as affective computing. We’ll explore the methods for sentiment analysis within human responses using artificial intelligence, measuring and reacting to conversational emotions such as love and hate, and developing longer relationships between human and computer. Does this seem far-fetched? Let’s give it a try!

The Software Of Today

The conversational user interface has long existed as a natural language exchange between humans and computers. It’s been in use for almost as long as the computer terminal has existed. Allowing users to communicate with computer software by text chat or voice is often considered to be the ultimate level of connectivity between humans and machine.

Early forms of terminal programs allowed interaction with the user through a simple command prompt console. These are still found today in many text-based interfaces, including the Windows, Mac, or Linux command prompt, as well as databases, and a variety of other software. These typically involve the user entering short and specific commands to execute code and receive text responses from the software. Communication is often cryptic and difficult to read. It is also common to require multiple commands, in a sequence, to complete a particular task.

From the Console to Conversational UI

It’s not too difficult to see how traditional command-line text interfaces could be improved with conversational user interfaces. What might normally take five sequences of commands and responses to complete could be achieved with a single sentence, communicated to the software. The response could be far more human-friendly and could even suggest additional tasks that suit the user’s purpose.

Advancing from command-line text interfaces to voice-based software makes it even easier to see the power behind conversational user interfaces. With access to thousands of APIs readily available over the Internet, software can easily tap into web services to achieve far more power than ever before. When this is combined with voice-based conversational UI and advanced accuracy levels of speech recognition, it brings an entirely new level of interaction between the computer and user. Pointing and clicking will develop into complete conversations, with a growing feeling of the computer actually working “with” the user, rather than “for” the user.

Naturally, this lends itself more easily to adapting emotion into the conversations, in an effort to make the software interaction even more human-like.

So, how exactly can we build emotional software? Let’s take a look at what is required.

A Design for Emotional Software

Creating artificially intelligent emotion within software boils down to two core concepts. First, it requires recognizing the emotion of the user. Second, it requires responding with emotion to the user.

A basic table expressing these tasks, along with potential solutions, is shown below.

| # | Core Concepts of Emotional Software | Solution |
|---|---|---|
| 1 | Recognize emotion | Sentiment analysis |
| 2 | Respond with emotion | State machine transition |

Recognizing Emotion

The first requirement, recognition, can be based on sentiment analysis of the user’s phrasing. If a phrase contains keywords with negative associations, it’s a good guess that the user is expressing negative emotional sentiment or frustration. Likewise, positive keywords could indicate a favorable disposition of the user. By making this determination, the software can trigger the associated state for positive or negative emotional interaction.
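As a minimal sketch of this keyword approach, the function below counts positive and negative terms in a phrase and reports the overall sentiment. The word lists and the name `detectSentiment` are assumptions made for illustration, not part of any particular library.

```javascript
// Hypothetical keyword lists; a real system would use a larger lexicon or a trained model.
const POSITIVE_WORDS = ["love", "great", "happy", "good"];
const NEGATIVE_WORDS = ["hate", "bad", "unhappy", "terrible"];

// Returns "positive", "negative", or "neutral" for a phrase.
function detectSentiment(phrase) {
  // Lowercase the phrase and split on anything that is not a letter or apostrophe.
  const words = phrase.toLowerCase().split(/[^a-z']+/).filter(Boolean);
  let score = 0;
  for (const word of words) {
    if (POSITIVE_WORDS.includes(word)) score += 1;
    if (NEGATIVE_WORDS.includes(word)) score -= 1;
  }
  if (score > 0) return "positive";
  if (score < 0) return "negative";
  return "neutral";
}

console.log(detectSentiment("I'm really unhappy with these financial figures."));
```

Note that plain keyword matching has no notion of sarcasm: a phrase such as “Oh, just great.” would be scored as positive by this sketch, which is one reason trained models tend to do better.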

Emotional Response

This leads us to the second requirement, which is an emotional response. Once the sentiment of the user’s phrase has been determined, the software needs to formulate a response, including emotional phrasing where applicable, or standard phrasing for other cases.

Since there can be a variety of acceptable computer responses to a particular query, at least two responses (neutral, positive, and likely more) would be required for a query in a given state of sentiment.

Software Emotion Requirements

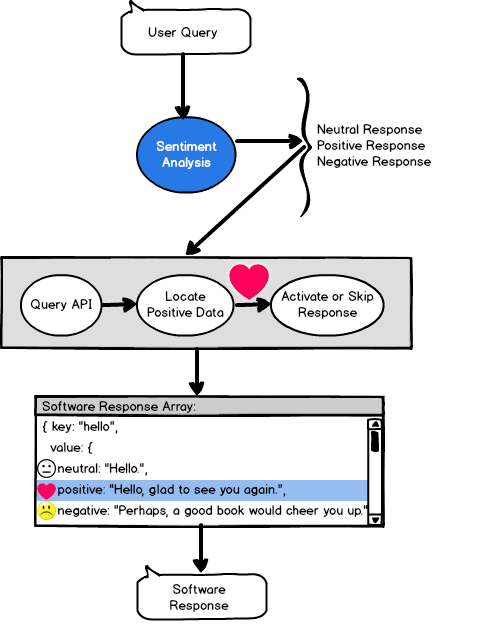

A simple diagram of including emotion within a conversational UI is included below. Notice how the software’s conversation consists of an array of possible responses to a query. Based on sentiment, the software may alter its response by first proactively fetching additional data, and then responding to the user if the data is deemed favorable. This effect is created by transitioning a state machine across neutral, positive, and negative sentiment.

1 | [User Query] -> |

Each response to a user’s query consists of an array of sentences, and even actions. These additional actions can trigger depending on sentiment states, with additional data being fetched on behalf of the user, and only announced back to the user if deemed favorable. Of course, the user can still specifically ask for this data, but the key point is having the software empathize with the user, offering a way to “cheer up” the conversation with positive data points.

An Example of Software Emotion: Stock Charts

Let’s consider an example case of a user who has requested a stock chart for the day. The figures look disappointing. The user might speak the phrase, “I’m really unhappy with these financial figures.” or “Oh, just great.” The software can contain three possible responses for the current state, as shown below:

User Query: “I’m really unhappy with these financial figures.”

Emotional Sentiment: Negative

Software State: Stock price has been supplied to user.

Available Responses:

“Please say a ticker symbol.”

“Although numbers are down, the 30-day moving average is well within positive territory.”

“The numbers are indeed positive, should we review the volume as well?”

Considering the above example, the software has determined a negative sentiment from the user, as a result of a loss on a stock purchase. A typical response from a conversational UI might be to simply prompt the user to ask about another stock symbol. However, once sentiment has been determined, the software could proactively fetch the 30-day moving average for the stock and, if it is positive, let the user know about this favorable news. If the additional metric turns out to be negative, the software could search for yet another metric (60-day moving average, volume, YTD gain, etc.), until a positive metric is found or the search is exhausted. Likewise, if a user expresses overtly positive emotion about a stock price, perhaps the software could proactively ask to check the stock volume as well.
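The search for a favorable metric could be sketched as follows. The metric values are hard-coded and hypothetical, and the function names are assumptions; a real app would fetch the figures from a market data service.

```javascript
// Hypothetical metric values for one stock; a real app would fetch these from a data API.
const metrics = [
  { name: "30-day moving average", change: -0.4 },
  { name: "60-day moving average", change: 1.2 },
  { name: "YTD gain", change: 5.0 },
];

// Returns the first metric with a positive change, or null if the search is exhausted.
function findFavorableMetric(candidates) {
  for (const metric of candidates) {
    if (metric.change > 0) return metric;
  }
  return null;
}

// Picks one of the three available responses based on the detected sentiment.
function respondToStockSentiment(sentiment, candidates) {
  if (sentiment === "negative") {
    const favorable = findFavorableMetric(candidates);
    if (favorable) {
      return `Although numbers are down, the ${favorable.name} is well within positive territory.`;
    }
  } else if (sentiment === "positive") {
    return "The numbers are indeed positive, should we review the volume as well?";
  }
  // Neutral sentiment, or no favorable metric found: fall back to the standard prompt.
  return "Please say a ticker symbol.";
}

console.log(respondToStockSentiment("negative", metrics));
```

Falling back to the neutral prompt when no favorable metric exists keeps the software from announcing further bad news on its own initiative.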

Given the above, it’s clear that sentiment plays an important part in formulating an emotional response. Let’s take a look at this in detail.

Preventing Frustration in Conversational UI

The first requirement for measuring emotion within a conversational user interface is to detect the positivity or negativity in a given conversation. To be specific, we’re looking at the conversation between a human and computer. Short and simple typed commands have been replaced with flowing sentences in this context. As such, the user is conversing with the software, while performing tasks for a certain goal.

If the user speaks an inaudible or invalid phrase, it might be typical for the computer to respond with a “catch-all” phrase, asking the user to repeat the question or provide a list of options. This can be unnecessary and may even invoke frustration from the user. It might be more advantageous to detect the frustration ahead of time, and offer the user alternatives for completing the task.

To detect frustration from the user, we can utilize sentiment analysis. Specifically, we can try to measure the degree of positivity within a sentence and track this level of emotion throughout the conversation. If the level falls below a certain threshold, the software could take corrective action to assist the user in a friendly manner, or at the very least, show empathy.
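One way to track this level over a conversation is a small running total, sketched below. The class name `SentimentTracker`, the per-phrase scores, and the threshold of -2 are all assumptions chosen for illustration.

```javascript
// Tracks a running sentiment level across a conversation.
// The default threshold is an assumed value, not a recommended setting.
class SentimentTracker {
  constructor(threshold = -2) {
    this.level = 0;
    this.threshold = threshold;
  }

  // Adds the score of the latest phrase (negative numbers mean negative sentiment).
  record(score) {
    this.level += score;
    return this.level;
  }

  // True once the running level has fallen below the threshold.
  needsHelp() {
    return this.level < this.threshold;
  }
}

const tracker = new SentimentTracker(-2);
tracker.record(-1); // the user sounds mildly annoyed
tracker.record(-2); // the user repeats the request, clearly frustrated
if (tracker.needsHelp()) {
  console.log("Sorry about that. Would you like to try a different way?");
}
```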

An Example of Software Emotion: Music Player 1.0

Below is an example of a more traditional interaction with software. Notice how the user expresses frustration after the computer plays the wrong song. However, the computer doesn’t recognize the command and simply fails to reply.

1 | Hello, what song would you like to play? |

In the above conversation, the computer ceases to reply to the negatively charged spoken phrase, simply because none of the terms match any of the program’s key phrases. We’re lucky, in this case, that the user tries again and re-issues a new query in a different form. For some users, we might not get so lucky, in which case the user simply quits the software and gives up. The computer recognizes the new phrase, advancing its internal state (to determine a specific song), and asks the user to name the song to play.

An Example of Software Emotion: Music Player 2.0

Imagine if the conversation were changed, as follows:

1 | Hello, what song would you like to play? |

In the above conversation, we can see a much more flowing interaction. In fact, the computer is even showing empathy when the user first expresses frustration. By reacting in a more human-like fashion, the user could be put at ease, and perhaps even find it more enjoyable working with the software.

In addition, notice how the computer has tracked the context of the conversation. After the user issues a request to play a song, the conversational UI sets its context to “playing a song”. Although it’s playing the wrong song (from Green Day instead of Britney Spears), the context of “playing a song” is still valid. When the user then issues the negatively charged response, “No, I said Britney Spears”, the software understands that the user wants to play a song (that’s our current state) and updates the context subject from “Green Day” to “Britney Spears”. Now, the computer can ask which song, since it already knows the artist and task, allowing the user to simply state the song title.

Conversational UI State Transition

We can dig into the state machine transition for the example scenario above. The software state might appear as follows:

1 | { sentiment: neutral, action: null, subject: null, song: null } |

In the above state flow, notice how the internal variables for the software transition according to the triggering of sentiment. Initially, the internal state was set to playing a song by a particular artist. When the user indicates that the artist was interpreted incorrectly (through a failure of voice recognition), the negative sentiment is detected and results in a transition of the subject. In addition, the song value is set to null. The software now asks the user to provide a value for the song, at which point, the state transition may now be completed.
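The transitions described above could be sketched as a small reducer-style function over the same four fields (`sentiment`, `action`, `subject`, `song`). The event names and the song titles are hypothetical placeholders, not taken from the original conversation.

```javascript
const initialState = { sentiment: "neutral", action: null, subject: null, song: null };

// Returns a new state for each event; the previous state is never mutated.
function transition(state, event) {
  switch (event.type) {
    case "PLAY_REQUEST":
      return { sentiment: "neutral", action: "play", subject: event.artist, song: event.song || null };
    case "CORRECTION":
      // Negative sentiment while already playing: keep the action,
      // swap the subject, and clear the song so the software can ask for it.
      if (state.action === "play") {
        return { sentiment: "negative", action: "play", subject: event.artist, song: null };
      }
      return { ...state, sentiment: "negative" };
    case "SONG_NAMED":
      return { ...state, sentiment: "neutral", song: event.song };
    default:
      return state;
  }
}

// Voice recognition mishears the artist, the user corrects it, then names a song.
const playing = transition(initialState, { type: "PLAY_REQUEST", artist: "Green Day", song: "Placeholder Song A" });
const corrected = transition(playing, { type: "CORRECTION", artist: "Britney Spears" });
const resolved = transition(corrected, { type: "SONG_NAMED", song: "Placeholder Song B" });
console.log(corrected, resolved);
```

Because the action survives the correction, the software only has to ask for the missing song rather than restart the whole request.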

Now that we’ve seen how important sentiment analysis is for determining the state of a conversation between human and computer, let’s dig into the details of calculating sentiment.

Measuring Positivity with Sentiment Analysis

It’s clear that there are certain keywords and phrases that evoke negativity or frustration. If we can key off of these phrases, we can detect a change in emotion from the user and respond more appropriately to help guide them towards completing a task.

One method for measuring conversational emotion is through artificial intelligence and natural language processing, with machine learning and sentiment analysis.

There are a variety of different methods for sentiment analysis, each with differing degrees of accuracy. Basic techniques can include simple keyword searches, such as using the AFINN word list or other dictionary-based algorithms. Artificial intelligence based techniques include trained sentiment analysis, and often result in higher accuracies than their non-AI counterparts.

Note: we’re about to get into some nitty-gritty data science stuff! Feel free to skip ahead to the demo, if you prefer!

Sentiment Analysis: Keyword-Based vs Machine Learning

As an example of the difference between keyword-based sentiment analysis and AI machine learning models, we can take a look at some simple accuracy tests on a large Twitter dataset.

The dataset contains 1,600,000 records of tweets that were recorded over a given time. Each tweet was automatically scored as having positive or negative emotion by detecting the happy :) or sad :( emoticons within the body of the tweet.
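The emoticon-based labeling can be illustrated with a short sketch. This is not the code used to build the dataset; the function name and the choice to skip tweets with both or neither emoticon are assumptions.

```javascript
// Returns 1 for a positive tweet, 0 for a negative tweet,
// or null when there is no clear emoticon signal (an assumed rule for this sketch).
function labelByEmoticon(tweet) {
  const happy = tweet.includes(":)");
  const sad = tweet.includes(":(");
  if (happy === sad) return null; // neither emoticon, or both: ambiguous
  return happy ? 1 : 0;
}

console.log(labelByEmoticon("Finally Friday :)"));
console.log(labelByEmoticon("Missed my train :("));
```

Labels produced this way are noisy, since an emoticon is only a rough proxy for how the author actually felt, which partly limits the accuracy any algorithm can reach on such data.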

Below is a table showing sentiment analysis accuracy measurements using differing algorithms.

| Algorithm | Accuracy |
|---|---|
| nrc * | 58% |
| Syuzhet * | 63% |
| Bing * | 63% |
| AFINN * | 65% |
| Logistic Regression | 67% |
| XGBoost | 67% |
| SVM | 80% |

Accuracy in sentiment analysis can be difficult to get just right. Standard keyword-based methods produce lower accuracy results, as they only take into account a canned list of emotionally charged terms. In addition, algorithms that are not based on machine learning cannot increase accuracy by learning additional traits from a training set. By contrast, machine learning algorithms are capable of identifying more features in larger datasets, increasing the accuracy over larger volumes of data.

Sentiment Analysis Using AFINN

Let’s see how the AFINN word list scores on accuracy for sentiment analysis. AFINN is an English word list that associates a score between -5 and +5 for differing emotional terms. The terms were manually labeled.
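To make the scoring idea concrete, here is a minimal sketch in JavaScript (the article’s own analysis uses R). The four-entry lexicon is a stand-in whose scores are assumed for illustration and should be checked against the published AFINN list before any real use.

```javascript
// A tiny stand-in lexicon in the style of AFINN: word -> integer valence from -5 to +5.
// The scores below are assumed for illustration only.
const lexicon = new Map([
  ["love", 3],
  ["good", 3],
  ["bad", -3],
  ["hate", -3],
]);

// Sums the valence of every known word in the text; unknown words count as 0.
function afinnStyleScore(text) {
  return text
    .toLowerCase()
    .split(/[^a-z]+/)
    .filter(Boolean)
    .reduce((sum, word) => sum + (lexicon.get(word) || 0), 0);
}

// A score above zero is treated as positive sentiment, below zero as negative.
console.log(afinnStyleScore("I love this good book"));
console.log(afinnStyleScore("What a bad day"));
```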

We can use R to load the Twitter dataset and process a small subset of the data for accuracy scoring of sentiment analysis.

First, we’ll use the following R packages:

1 | packages <- c('syuzhet', 'data.table', 'caTools', 'slam', 'tm', 'SnowballC', 'randomForest', 'xgboost') |

Next, we can use the following code to read the Twitter dataset for sentiment analysis and accuracy measurements.

1 | # Read the dataset. |

When we run the above code, we find AFINN scoring 64.64% accuracy for sentiment analysis against the Twitter dataset. This is certainly better than random chance, but it can likely be improved upon.
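The accuracy figure is simply the fraction of tweets whose predicted label matches the emoticon-derived label. A small sketch of that calculation, with made-up labels, might look like this:

```javascript
// Fraction of predictions that match the actual labels.
function accuracy(predicted, actual) {
  if (predicted.length !== actual.length || predicted.length === 0) {
    throw new Error("predicted and actual must be non-empty arrays of equal length");
  }
  let correct = 0;
  for (let i = 0; i < predicted.length; i++) {
    if (predicted[i] === actual[i]) correct += 1;
  }
  return correct / predicted.length;
}

// Hypothetical labels: 1 = positive, 0 = negative.
console.log(accuracy([1, 0, 1, 1], [1, 0, 0, 1]));
```

On a dataset split evenly between positive and negative tweets, random guessing would land near 50%, which is the baseline the scores in this article are compared against.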

Sentiment Analysis Using Machine Learning

We can also check the sentiment analysis accuracy of the Twitter dataset using a machine learning AI approach. To do this, we’ll first build a corpus of the terms within the dataset. This effectively becomes our dictionary of terms. We then strip down the terms by removing punctuation, stopwords, numbers, and use stemming to make variations of the same word common. Finally, we narrow down the dictionary, using sparse terms, so that we only keep terms that appear more frequently within all of the documents. This helps speed up the training time of the machine learning algorithms.

Our end result is a document term matrix, consisting of columns for each term in our corpus, and rows for each document. The value in each cell corresponds to the frequency of the term appearing in the document. The document term matrix is a useful way to encode text for a variety of natural language processing tasks, such as trending topics, classifying documents, searching by keyword, or even detecting a hacked tweet.
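The structure of a document term matrix can be shown with a small JavaScript sketch. It strips punctuation and numbers and removes a few stopwords, but leaves out stemming and sparse-term removal to stay short; the stopword list and function name are assumptions.

```javascript
// Builds a document-term matrix: one row per document, one column per term.
// Each cell holds how many times the term appears in the document.
function buildDocumentTermMatrix(documents, stopwords = new Set(["the", "a", "is", "i"])) {
  const tokenized = documents.map((doc) =>
    doc
      .toLowerCase()
      .replace(/[^a-z\s]/g, " ") // drop punctuation and numbers
      .split(/\s+/)
      .filter((word) => word && !stopwords.has(word))
  );

  // The sorted set of all remaining words becomes the dictionary of terms.
  const terms = [...new Set(tokenized.flat())].sort();
  const index = new Map(terms.map((term, i) => [term, i]));

  const matrix = tokenized.map((words) => {
    const row = new Array(terms.length).fill(0);
    for (const word of words) row[index.get(word)] += 1;
    return row;
  });

  return { terms, matrix };
}

const dtm = buildDocumentTermMatrix(["I love the book", "The book is bad, bad"]);
console.log(dtm.terms);
console.log(dtm.matrix);
```

Every row has the same length, so each document becomes a fixed-size numeric vector regardless of how long the original text was.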

Below is an example of building a corpus.

1 | # Create a document term matrix. |

In the above code, you can see how we’ve created the document term matrix from the Twitter dataset, effectively encoding the tweets into an array of numbers for each term. Since each document now exists as a row with the same number of columns (one for each term), our array is well-formed for processing by machine learning algorithms.

We’ve also set up a test document term matrix that uses the same word dictionary as the training set. This means the test array will contain the same number of columns as the training array, and can thus be used with any trained machine learning models that we create.

Let’s start with a logistic regression model.

Logistic Regression

For our first sentiment analysis accuracy attempt, we can try a simple logistic regression model. We’ll pass all columns (all terms) of the document term matrix into the algorithm and train against the “y” value, which indicates positive sentiment. The code is shown below.

1 | # Build models. |

Upon training the logistic regression model, we find an accuracy of 76% on the training set and 67% on the test set. This is an improvement over AFINN, although minimal.
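For readers curious about what the model does with the matrix, below is a from-scratch sketch of logistic regression trained by batch gradient descent. It is not the R code used for the results above; the toy rows, learning rate, and epoch count are assumptions chosen so that the example runs quickly.

```javascript
function sigmoid(z) {
  return 1 / (1 + Math.exp(-z));
}

// Trains one weight per term (plus a bias) with batch gradient descent on the log loss.
function trainLogisticRegression(rows, labels, { learningRate = 0.5, epochs = 500 } = {}) {
  const n = rows[0].length;
  const weights = new Array(n).fill(0);
  let bias = 0;

  for (let epoch = 0; epoch < epochs; epoch++) {
    const gradW = new Array(n).fill(0);
    let gradB = 0;
    rows.forEach((row, i) => {
      const z = row.reduce((sum, x, j) => sum + x * weights[j], bias);
      const error = sigmoid(z) - labels[i]; // predicted probability minus true label
      row.forEach((x, j) => { gradW[j] += error * x; });
      gradB += error;
    });
    for (let j = 0; j < n; j++) weights[j] -= (learningRate * gradW[j]) / rows.length;
    bias -= (learningRate * gradB) / rows.length;
  }
  return { weights, bias };
}

// Returns 1 (positive) when the predicted probability is at least 0.5, else 0.
function predict(model, row) {
  const z = row.reduce((sum, x, j) => sum + x * model.weights[j], model.bias);
  return sigmoid(z) >= 0.5 ? 1 : 0;
}

// Toy document term matrix with columns [bad, book, love]; y = 1 means positive sentiment.
const trainRows = [[0, 1, 1], [2, 1, 0], [0, 0, 1], [1, 0, 0]];
const trainLabels = [1, 0, 1, 0];
const model = trainLogisticRegression(trainRows, trainLabels);
console.log(trainRows.map((row) => predict(model, row)));
```

The gap between training and test accuracy reported above is a reminder that fitting the training rows well, as this toy model does, says little about unseen text.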

XGBoost

For our next sentiment analysis accuracy measurement, we can try using the XGBoost algorithm. Below is an example of the code.

1 | n <- ncol(tdmtrain) |

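Upon training the model, we find an accuracy of 84% on the training set and 67% on the test set. The training accuracy is higher than with logistic regression, but the test accuracy is the same, so the extra fit does not appear to generalize to unseen tweets.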

Support Vector Machine (SVM)

With a support vector machine (SVM) model, we can boost the accuracy for sentiment analysis even further. With a larger training dataset, an accuracy of about 82% on a cross-validation set can be achieved.

It’s possible to push the accuracy even higher with larger datasets and longer training. Additional techniques include the use of recurrent neural networks to take into account the placement of emotionally important terms within a sentence.

Writing Software to Respond to Emotion

Now that we’ve addressed how to detect the emotion of a conversation, we can begin to address a solution for aiding the user. Sentiment analysis has given us an indicator of neutral, positive, or negative sentiment that can be used as a trigger mechanism for advancing the internal state of our conversational UI or artificial intelligence routine.

We’ve seen some examples above, of what a state machine like this might look like. Let’s try implementing one!

An Example of Software Emotion: Book Reviews 1.0

As a first attempt at a simple conversational UI for a book review app, we’ll implement a basic chat bot interface. In order to keep the code concise and easily understandable, we’ll use just a handful of hard-coded keywords and responses that our chatbot conversational UI will recognize and respond to.

The following topics will evoke a response:

1 | hello |

We’ll store the keywords, along with their associated responses, in a key/value pair that we’ll call our “brain”. We’ll simply compare the user’s text (or speech, if using speech recognition) to find any matching keyword within our bot’s brain. If a match is found, the bot simply responds with the resulting value.
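A minimal sketch of this matching scheme is shown below. The ratings follow the chat session described in this article, while the exact response wording and the entry layout are assumptions rather than the demo’s actual code.

```javascript
// Hypothetical brain entries: each keyword maps to a single canned response.
var brain = [
  { key: "hello", response: "Hello! Name a book and I will tell you its rating." },
  { key: "alice in wonderland", response: "Alice in Wonderland is rated 4 stars." },
  { key: "pemberley", response: "Pemberley is rated 2 stars." }
];

// Returns the response for the first keyword found in the input, or null if none match.
function respond(input) {
  var text = input.toLowerCase();
  for (var i = 0; i < brain.length; i++) {
    if (text.indexOf(brain[i].key) !== -1) {
      return brain[i].response;
    }
  }
  return null;
}

console.log(respond("What about Alice in Wonderland?"));
console.log(respond("I love Alice in Wonderland!"));
```

Both inputs above produce the same reply, because the match looks only at the keyword and ignores everything else in the sentence.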

A Chat Session with Book Reviews 1.0

Below is an example session of chatting with the Book Reviews conversational UI version 1.0.

1 | > hello |

Notice, in the above conversation with the chatbot, the user begins by simply saying “hello” to greet the bot. The bot recognizes the keyword, as it matches one of the hard-coded terms within its brain, and outputs the associated response, instructing the user on how to interact with the bot to obtain a book review.

Next, the user asks about the book, “Alice in Wonderland”, and the chatbot plainly responds with the rating for the book. Next, comes the important part!

When the user exclaims, “I love Alice in Wonderland!”, which clearly evokes an emotional connotation, the chatbot simply responds with the rating for the book. This is expected, as the software is operating exactly as was intended. It is simply matching a keyword and responding with a value, with no determination of further characteristics about the user’s emotional disposition.

Likewise, the second book review request contains an unfavorable rating. Again, the chatbot makes no distinct expression, and plainly responds with the rating of the book. No doubt, feeling dissatisfaction with the software, the user states how they feel and terminates the app.

We’re going to take a look at upgrading the Book Reviews app to a version 2.0 in just a bit. There, we’ll add emotion! However, first let’s take a quick look at the code for the original version.

A Look Inside Book Reviews 1.0

If you’re curious, the code for the first book review app is shown below. You can see how simplistic the request and response model for the brain is. We simply store key/value pairs and match against the user’s input using the simple indexOf() command. While this software may not win any awards, it gets the point across of implementing a simple conversational UI style app.

1 | var brain = [ |

You can find the full code and a demo for this chatbot at https://jsfiddle.net/dbyzztxp/1/

An Example of Software Emotion: Book Reviews 2.0

We’ve just seen a simple example of the Book Reviews app, utilizing a conversational UI for chatting with the user. The bot made no expression of emotion and simply responded to user queries in a plain fashion. No characteristics of the user’s input or emotional disposition were measured, as the software only needed to match a keyword and respond.

We’re now going to give our bot an upgrade with some built-in emotion!

A Chat Session with Book Reviews 2.0

Below is an example session of chatting with the Book Reviews conversational UI version 2.0, utilizing emotional responses. Notice how different, and even intimate, the conversation becomes.

1 | > hello |

Just as before, the user initiates the conversation with the chatbot with a greeting of, “hello”. The chatbot, unable to detect any sentiment from the user, simply responds with a standard greeting. So far, this is no different than our first version.

Next, the user comments about the poor functionality of the prior version of the software. Specifically, the user includes the term “hello” again (to initiate another greeting), but also expresses clear negative sentiment in the message by using the word “bad” (which happens to be a keyword phrase in our bot’s brain for measuring sentiment).

The chatbot matches the term “hello”, but instead of simply responding with a default value, it makes a measurement of sentiment on the sentence. The sentiment is detected as negative and the bot responds in kind, by offering to help cheer the user up with a book review. Cool!

Next, the user asks for a review about “Alice in Wonderland”. Again, the chatbot makes a measurement of sentiment, detects a neutral response, and responds with the associated value for that sentiment. This happens to be a standard neutral response, which is the same as the first version app. However, after hearing the good review of 4 stars, the user exclaims, “I love Alice in Wonderland!” (just as they did in the last example). This is where emotion really makes a difference!

The artificial intelligence in the conversational UI makes a measurement of sentiment on the user’s input sentence. Since the term “love” corresponds to a positive sentiment, the chatbot responds with a positively charged result. In this case, the response is a recommendation for a similar book by the same author. Let’s consider the ramifications of this.

The True Power of Emotional Artificial Intelligence in Software

The chatbot has just successfully measured the sentiment of a user’s response and determined it to be positive. This occurred, along with an association to having just retrieved a review for a particular book. The software can infer that the user probably likes this book (and the author too), and thus, recommend a similar book by the same author.

The user did not explicitly request a similar book. Nor are we cluttering a web page user interface with potentially distracting information, such as lists of related books, similar books, and books that others have purchased. Instead, we’re specifically targeting the positive emotional sentiment, expressed by the user, and keying off of this indicator to recommend another book by the same author.

By tuning into the emotional disposition of the user, we may have just boosted the utilization value (not to mention, book sales!) of the software.

Continuing the Conversation with Book Reviews 2.0

You can follow through the rest of the chat-bot session conversation. The user asks about another book review, this time for “Pemberley” (just as they did in the first version example). The chatbot detects neutral sentiment and responds with a plain rating for the book. However, this time, when the user expresses negative emotion about the review (as the book only holds a 2-star review), the chatbot is able to key off of the negative emotion detected, and respond accordingly.

Having detected the negative sentiment, the conversational UI retrieves the negative-associated response for the topic, which is to offer the user a higher-rated, but similar, book by a different author.

We’re, again, tapping into the hidden value of the user’s emotional sentiment to take advantage of potential opportunities. Where the user might simply exit the app at this point (negative sentiment has a way of causing this!), the bot attempts to re-context the conversation by offering another book. This could have the effect of increasing user retention within the app and boosting book sales via the recommendation of related (and emotionally on-topic) books!

Concluding the conversation, the user states that they also dislike the recommended book (much to our dismay), and asks the app for help. Again, the chatbot is able to detect the negative sentiment in the request for help. Empathizing with the user and attempting to turn the user’s disposition more positive, the bot reminds the user that retrieving additional book reviews can be easy. It follows with instructions for retrieving another book review.

A Look Inside Book Reviews 2.0

We’ve just seen some very interesting results from the inclusion of emotion into our book reviews conversational UI chatbot. Let’s see how this all works.

Just as we had in the first version, there is a brain database containing key/value pair responses. The difference this time, however, is that for each matching keyword we provide three different corresponding values. Each value is separated by sentiment.

Below is an example of this simple data structure.

1 | var brain = [ |

Notice how each term has multiple responses, differentiated by detected sentiment. While the responses in this example are hard-coded for specific books, it’s easy to see how we could dynamically query against an API and incorporate those results into the sentiment-specific responses.

The main respond() method is nearly identical to the first version, but includes a measurement of sentiment.

1 | function respond(input) { |

The above method, while similar to the original version, now includes a line to determine the sentiment. It uses the resulting emotional value to retrieve the associated response with the matching keyword. In this way, multiple potential responses can be found for any single topic.

The code for calculating the sentiment is simply a hard-coded keyword list of emotionally charged terms (not unlike the AFINN model for measuring sentiment, as discussed earlier). This is to keep the demonstration simple. However, a more accurate sentiment calculation can be done by using an artificial intelligence machine learning model, as we’ve shown earlier.
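Putting the pieces together, a self-contained sketch of the sentiment-aware version might look like the following. The keyword lists, response wording, and entry layout are assumptions for illustration; the full demo linked below is the reference for the actual code.

```javascript
// Hypothetical lists of emotionally charged terms.
var positiveWords = ["love", "great", "good"];
var negativeWords = ["bad", "hate", "dislike"];

// Returns "positive", "negative", or "neutral" by counting charged words.
function getSentiment(input) {
  var words = input.toLowerCase().split(/[^a-z]+/);
  var score = 0;
  for (var i = 0; i < words.length; i++) {
    if (positiveWords.indexOf(words[i]) !== -1) score++;
    if (negativeWords.indexOf(words[i]) !== -1) score--;
  }
  return score > 0 ? "positive" : score < 0 ? "negative" : "neutral";
}

// Each keyword now carries one response per sentiment.
var brain = [
  {
    key: "hello",
    responses: {
      neutral: "Hello! Name a book and I will tell you its rating.",
      positive: "Hello! Great to hear from you. Which book shall we look up?",
      negative: "Sorry to hear that. Maybe a book review will cheer you up?"
    }
  },
  {
    key: "alice in wonderland",
    responses: {
      neutral: "Alice in Wonderland is rated 4 stars.",
      positive: "Glad you like it! Shall I suggest a similar book by the same author?",
      negative: "Sorry it was not for you. Shall I suggest a book by a different author?"
    }
  }
];

// Matches a keyword as before, then picks the response for the detected sentiment.
function respond(input) {
  var text = input.toLowerCase();
  var sentiment = getSentiment(text);
  for (var i = 0; i < brain.length; i++) {
    if (text.indexOf(brain[i].key) !== -1) {
      return brain[i].responses[sentiment];
    }
  }
  return null;
}

console.log(respond("I love Alice in Wonderland!"));
```

Splitting the input into whole words before matching avoids a subtle trap with substring checks, where a term such as “dislike” would also match a positive keyword like “like”.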

You can find the full code and a demo for this chatbot at https://jsfiddle.net/z0rkyq4L/2/

Conclusion

It’s clear that there is more to recognize in a conversational UI than simple keyword and phrase matching. After all, we’ve just seen how powerful the effects of sentiment can be, when considering the emotional disposition of a user conversing with the software.

While traditional conversational UI ignores contextual properties, such as sentiment, and tends to issue plain responses to user queries with a simple utterance match, sentiment can bring a chatbot conversation to a whole new level.

Through the addition of sentiment detection, we were able to enhance a conversational UI and integrate more closely with the user, empathizing with their emotional state, and responding accordingly. Through the use of emotionally recognized responses, the conversational UI was able to tap into potentially missed opportunities, by recommending products, as inferred from the user’s emotion regarding the topic.

When positive emotion was detected, the chatbot selected a specific response type and product recommendation. Likewise, negative emotion helped steer the chatbot conversation in alternative directions, offering other product recommendations and help to the user.

Considering the effects of sentiment analysis in a conversational UI, perhaps we could take into account even more attributes that are traditionally hidden. By recognizing the importance and opportunities of additional speech characteristics, including emotion, sentiment, intonation, loudness, gender, and a variety of other metrics, we can hope to bring computer and human interaction closer together.

Download @ GitHub

The source code for this project is available on GitHub.

About the Author

This article was written by Kory Becker, software developer and architect, skilled in a range of technologies, including web application development, machine learning, artificial intelligence, and data science.
